MARS - Multicore Application Runtime System

Copyright 2008 Sony Corporation of America

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License".

DISCLAIMER

THIS DOCUMENT IS PROVIDED "AS IS," AND COPYRIGHT HOLDERS MAKE NO REPRESENTATIONS OR WARRANTIES, EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, NON-INFRINGEMENT, OR TITLE; THAT THE CONTENTS OF THE DOCUMENT ARE SUITABLE FOR ANY PURPOSE; NOR THAT THE IMPLEMENTATION OF SUCH CONTENTS WILL NOT INFRINGE ANY THIRD PARTY PATENTS, COPYRIGHTS, TRADEMARKS OR OTHER RIGHTS. COPYRIGHT HOLDERS WILL NOT BE LIABLE FOR ANY DIRECT, INDIRECT, SPECIAL OR CONSEQUENTIAL DAMAGES ARISING OUT OF ANY USE OF THE DOCUMENT OR THE PERFORMANCE OR IMPLEMENTATION OF THE CONTENTS THEREOF.

Additional Resources

- Future releases and other information for MARS:

- Source repository for MARS:

- http://git.infradead.org/ps3/mars-src.git

- git://git.infradead.org/ps3/mars-src.git

- Send bug reports and other MARS inquiries to the cbe-oss-dev mailing list:

Table of Contents

- 1 General Concepts

- 2 MARS Concepts

- 3 Overview of Usage

- 4 Context Management

- 5 Mutex Management

- 6 Workload Model Management

- 7 Task Management

- 8 Task Synchronization

- 9 Task Tutorials

- 10 API Reference

1 General Concepts

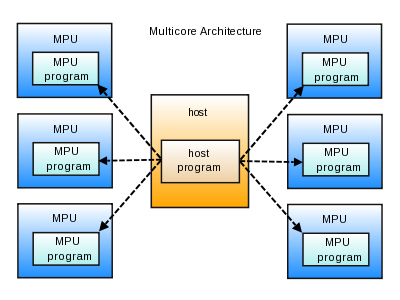

MARS (Multicore Application Runtime System) is a set of libraries that provides an API to easily create and manage user programs that are scheduled to run on the various microprocessing units of a multicore environment. MARS assumes a target multicore architecture with a single host processor (host) that manages or controls the execution of programs or processes on one or more separate microprocessing units (MPUs).

MARS assumes a target audience of application developers focusing on multicore architectures.

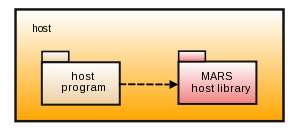

Fig. 1

1.1 Host Processor (host)

The host processor (host) is the processor on which the host program runs. The host program is responsible for initializing all sub programs to be run on the various microprocessing units (MPUs) available on the target multicore architecture.

The memory area accessible by the host processor will be referred to as the host storage.

- Note:

- In the Cell B.E. processor, the host processor is synonymous with the PPU.

- In the Cell B.E. processor, the host storage is synonymous with the PPU main storage.

1.2 Microprocessing Unit (MPU)

The microprocessing unit (MPU) is any one of the co-processors or DSPs of a multicore architecture that is responsible for executing a sub program that performs some form of processing or computation. The MPU program is the sub program that is initialized by the host program and executed on the MPU.

The MPU program should be in the ELF format. When the host program initializes the MPU program for execution, it needs to know the address of the MPU program ELF image in host storage. The procedure for loading the MPU program ELF image into host storage is platform dependent and outside the scope of MARS.

The memory area accessible by the MPU will be referred to as the MPU storage.

- Note:

- In the Cell B.E. processor, the MPU is synonymous with the SPU.

- In the Cell B.E. processor, the MPU storage is synonymous with the SPU local storage.

- In the Cell B.E. processor, the MPU program ELF image is obtained through the libspe2 API.
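Because the host passes MARS only the address of an MPU ELF image, a host program may want to sanity-check that buffer before handing it over. The sketch below is not part of the MARS API: the helper name `looks_like_spu_elf` is hypothetical, and it assumes the Cell B.E. case, where SPU programs are 32-bit big-endian ELF files whose machine type is `EM_SPU` (23).

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper, not part of MARS: checks the ELF identification
 * bytes and the e_machine field of an image already in host storage. */
#define DEMO_EM_SPU 23 /* ELF machine number of the Cell SPU */

int looks_like_spu_elf(const void *image, size_t size)
{
    const uint8_t *p = (const uint8_t *)image;

    /* Need e_ident (16 bytes) + e_type (2 bytes) + e_machine (2 bytes) */
    if (image == NULL || size < 20)
        return 0;

    /* ELF magic number: 0x7f 'E' 'L' 'F' */
    if (p[0] != 0x7f || p[1] != 'E' || p[2] != 'L' || p[3] != 'F')
        return 0;

    if (p[4] != 1) /* EI_CLASS must be ELFCLASS32 */
        return 0;
    if (p[5] != 2) /* EI_DATA must be ELFDATA2MSB (big-endian) */
        return 0;

    /* e_machine: big-endian 16-bit value at offset 18 */
    return ((p[18] << 8) | p[19]) == DEMO_EM_SPU;
}
```

A check like this only catches obviously wrong buffers (for example a PPU binary passed by mistake); it does not validate the rest of the image.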

1.3 Multicore Programming Limitations

When programming for a multicore architecture, the following limitations become apparent.

(1) Memory Size of MPU Storage

First, the memory size of the MPU storage is limited. As application processing becomes more complex and the code of MPU programs grows larger, the MPU programs offloaded to the MPUs may exceed the physical memory size of the MPU storage.

If the size exceeds the memory size of the MPU storage, the offloaded MPU processing must be partitioned into smaller pieces of code to reduce the code size of each MPU program. As a result of this partitioning, some collaborative processing between the MPU programs becomes necessary, such as transferring computation results or waiting for processing to complete.

(2) Number of Physical MPUs

Second, the number of physical MPUs is limited. Although much application processing calls for multi-MPU parallelization, the number of available MPUs is limited. If application processing is multi-threaded and many different MPU programs run simultaneously, the MPUs available for executing MPU programs will easily run out.

To run more MPU programs in parallel than there are physical MPUs available, a mechanism is needed to switch the currently running MPU programs depending on the situation. Also, if programs interact with each other as described in (1), the program execution order must be considered when switching and running MPU programs.

Thus, a complex mechanism to control execution of MPU programs is required for applications where multi-MPU parallelization is needed.

1.4 Host Centric Programming Model

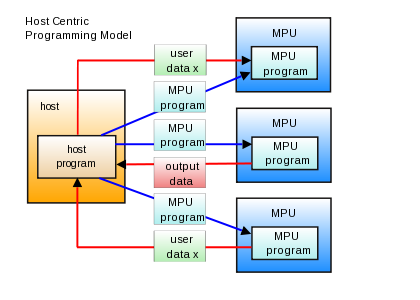

Many multicore applications use a host processor centric programming model. This means that the host processor is responsible for loading/switching MPU programs as well as sending/receiving the necessary data to and from those MPU programs.

Fig. 1.4

As shown in Fig. 1.4, when using such a host processor centric programming model, the host processor becomes heavily loaded with managing all MPU program execution and control operations. Not only does this keep the host processor from processing other tasks, but the MPU programs also suffer a decrease in performance as they wait for the host processor to finish managing all MPU programs.

To gain finer control over the execution of MPU programs with this host-centric approach, the MPU control code in host programs (for loading/switching MPU programs or handling interactions between MPU programs) becomes more complex. Furthermore, this decreases the performance of MPU programs, since they must wait for the host programs to complete.

For example, if one host program is running, other host programs in the application may need to wait their turn until that host program has completed. This delays the loading/switching of MPU programs and the sending/receiving of data between MPU programs, and consequently an MPU must sit idle even though it is free to run other programs during that time.

This is the result of controlling the execution of MPU programs via the host programs. If such host processing flow can be eliminated, and MPUs can directly send/receive data and load/switch MPU programs for execution, the MPUs can be used more efficiently.

1.5 MPU Centric Programming Model

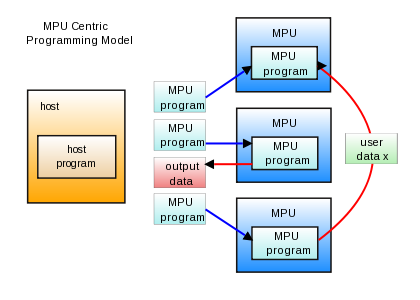

MARS provides an MPU centric programming model. The host processor is not responsible for loading/switching MPU programs or sending/receiving the necessary data to those MPU programs. Instead, the individual MPUs are responsible for loading and executing MPU programs and switching them out as necessary, without the need for the host processor.

Fig. 1.5

As shown in Fig. 1.5, loading/switching MPU programs and sending/receiving data are performed independently of the host program. Because the MPUs are self managing, they no longer need to wait for the host processor to finish MPU management, which increases MPU utilization and performance.

This also frees the host processor from most of the MPU management. However, the host processor is still responsible for some of the setup necessary for MPU program execution. Other operations that cannot be performed by the MPU, such as file input/output, must also be processed by the host program.

2 MARS Concepts

The MARS library provides a runtime environment, the “Multicore Application Runtime System”, that allows MPU programs to run in parallel on multiple MPUs. By using the MARS library, multiple MPU programs can be run cooperatively. This means that applications that run a large number of MPU programs one after another can be created without taking into account the physical number of MPUs available, leaving the responsibility of efficiently switching MPU program execution to the MARS library.

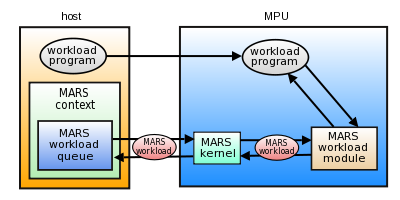

2.1 Kernel

The MARS kernel is what gets loaded onto each MPU storage and controls program execution on the MPUs. The kernel is responsible for scheduling, loading and executing workloads on the MPUs, and for loading their parameters. The kernel is a relatively simple and small piece of code that stays resident in each MPU's storage area. Each kernel has its own scheduler that determines which workload to process. Based on the scheduled workload, the kernel loads the necessary MPU program into MPU storage and executes it.

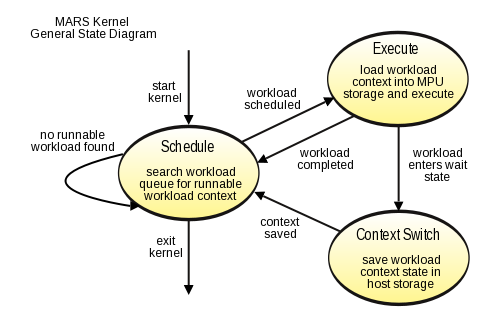

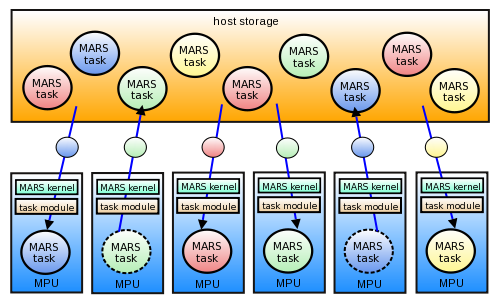

Fig. 2.1

As shown in Fig. 2.1, the kernel has 3 basic states of operation. Once loaded and started, the kernel's only responsibilities are to search for a workload to schedule, jump execution to the MPU program of the workload, context switch the workload if necessary, and then return to the scheduling state.

The kernel is non-preemptive, so a workload executing on an MPU continues to run and use up the MPU's resources until it finishes execution or enters a wait state. When a workload enters a wait state, the kernel must handle the context switch. This involves saving the workload context into host storage so that execution can continue when the workload is scheduled again later.
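The three kernel states and the non-preemptive behavior described above can be modeled in a few lines of host C. This is a toy simulation with hypothetical types, not the MARS kernel: each "workload" runs until it either finishes or reaches a point where it must wait, and a waiting workload's progress is kept in a saved field so it can resume on a later dispatch.

```c
#include <stddef.h>

/* Toy model of a non-preemptive kernel; all names are hypothetical. */
enum run_result { RUN_FINISHED, RUN_WAIT };

struct toy_workload {
    int saved_step;  /* stands in for the context saved to host storage */
    int total_steps; /* steps needed before the workload finishes */
    int finished;
};

/* Runs until the workload finishes or must wait. Nothing interrupts it;
 * in this toy it enters a wait state after every 2 steps. */
static enum run_result toy_run(struct toy_workload *w)
{
    int steps_this_run = 0;

    while (w->saved_step < w->total_steps) {
        w->saved_step++;
        if (++steps_this_run == 2 && w->saved_step < w->total_steps)
            return RUN_WAIT; /* progress is already saved in saved_step */
    }
    return RUN_FINISHED;
}

/* Kernel loop: scheduling -> executing -> context switch -> scheduling.
 * Returns how many times a workload was dispatched. */
int toy_kernel(struct toy_workload *queue, size_t n)
{
    int dispatches = 0;
    size_t remaining = n;

    while (remaining > 0) {
        for (size_t i = 0; i < n; i++) { /* scheduling state */
            if (queue[i].finished)
                continue;
            dispatches++;
            if (toy_run(&queue[i]) == RUN_FINISHED) { /* executing state */
                queue[i].finished = 1;
                remaining--;
            }
            /* On RUN_WAIT the "context switch" is trivial here: the state
             * already lives in saved_step, so the next workload is picked. */
        }
    }
    return dispatches;
}
```

With one workload needing 3 steps and another needing 1, the first is dispatched twice (it waits once) and the second once, for 3 dispatches in total.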

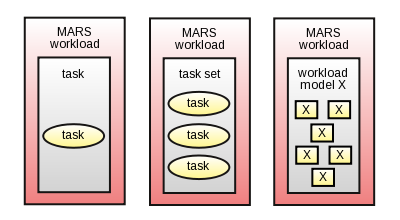

2.2 Workload Model

A workload model is the programming model that defines how MPU programs are processed and synchronized with each other. A workload is a single unit of one or more MPU programs that must be scheduled for execution on the MPUs. How a workload is actually processed after it is scheduled by the kernel varies based on the workload model.

MARS aims to support various workload models rather than being specific to just one. Therefore, an abstract MARS workload is necessary to accommodate various workload models.

One example of a workload model may be a single large process that is executed on a single MPU, while another example of a workload model may define a large number of small processes that are executed on various MPUs.

Fig. 2.2

- Note:

- Currently MARS only supports the MARS task model. Therefore, when we refer to the workload, it is synonymous with the MARS task. However, in the future the workload may refer to some arbitrary workload model not yet defined.

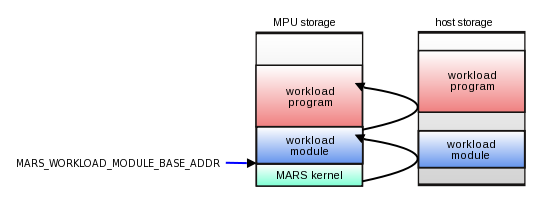

2.3 Workload Module

The MARS workload module is the initial MPU program executed by the MARS kernel when a workload is scheduled for execution and loaded into MPU storage. The main responsibility of the workload module is to load and process the necessary MPU program or programs as specified by the design of the workload model, update the state of the workload, and return execution to the MARS kernel. Each workload model needs its own workload module implementation that handles the model-specific processing of workloads. The workload module makes use of the module API provided by the MARS kernel. These kernel system calls are only accessible by the workload module. The interface between user programs and the workload module is left up to the workload model design.

Fig. 2.3

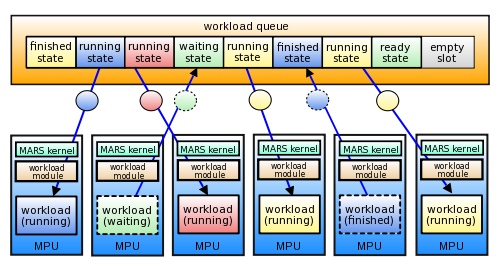

2.4 Workload Queue

The MARS workload queue is created and initialized at MARS context creation and resides in host storage. When workloads are created by the host program, they are stored in this queue. The MARS kernel is responsible for searching this queue for a schedulable workload, and when it finds one, it loads the workload into MPU storage for processing. Once the workload is loaded, the MARS kernel passes responsibility for processing it to the workload module specified in the workload.

When a workload is scheduled by the kernel, the workload's state within the queue is set to a reserved state so no other kernel will attempt to schedule the same workload.

Since this queue is shared by both host and MPU, its access is protected by atomic operations.
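The reservation step can be illustrated with a C11 compare-and-swap. This sketch assumes a simplified queue entry with hypothetical `WL_READY`/`WL_RESERVED` states; the actual MARS queue layout and atomic primitives differ, but the idea is the same: only the kernel whose swap succeeds gets the workload, so two kernels can never schedule the same one.

```c
#include <stdatomic.h>
#include <stddef.h>

/* Hypothetical workload states, for illustration only. */
enum { WL_NONE = 0, WL_READY = 1, WL_RESERVED = 2 };

struct queue_entry {
    _Atomic int state;
};

/* Try to reserve one READY entry for the calling kernel.
 * Returns the reserved index, or -1 if nothing is schedulable. */
int reserve_workload(struct queue_entry *q, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        int expected = WL_READY;

        /* The swap only happens if the state is still READY, so exactly
         * one caller wins even when several kernels race for the entry. */
        if (atomic_compare_exchange_strong(&q[i].state, &expected,
                                           WL_RESERVED))
            return (int)i;
    }
    return -1;
}
```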

Fig. 2.4

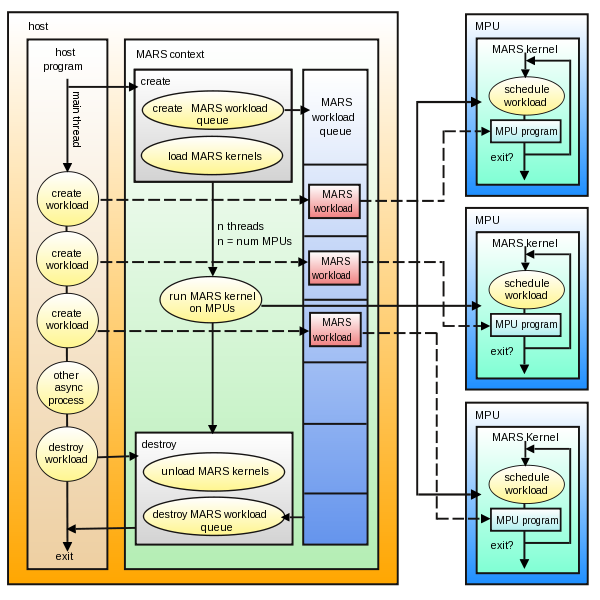

2.5 Context

The MARS context holds all necessary information and data for each MARS instance initialized for the system. Before any MARS functionality can be used, an instance of a MARS context must be initialized. When the system is completely done with MARS functionality, the context must be finalized.

When a context is initialized within a system by the host processor, each MPU (depending on how many MPUs are initialized for the context) is loaded with the MARS kernel that stays resident in MPU storage and continues to run until the host processor finalizes the context.

The context also creates the workload queue in host storage. Each kernel, through the use of atomic synchronization primitives, will reserve and schedule workloads from this queue.

When the context is finalized, all kernels running on the MPUs are terminated and all resources are freed.

In a system, multiple MARS contexts may be initialized, and the kernels and workloads of each context are independent of each other. However, one of the main purposes of MARS is to avoid the high cost of process context switches on the MPUs initiated by the host processor. If multiple MARS contexts are initialized, performance will drop significantly as each MARS context is context switched in and out. Ideally, a single MARS context should be initialized for the whole system.

Fig. 2.5

- Note:

- In Fig. 2.5, "other async process" means that the main program can perform other processes asynchronously while the MPU programs are being processed by the MARS kernels on the MPUs.

3 Overview of Usage

3.1 Host Library

The host program needs to make use of the host libraries provided by MARS. Depending on the target platform, MARS should install the necessary host headers and libraries to the appropriate host paths.

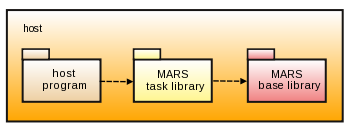

Fig. 3.1

In order to use any of the host processor library API, the user must include the necessary library API headers:

    #include <mars/task.h> /* header for task workload library API */

The host program written for the host processor needs to link in the MARS host libraries.

MARS provides both static and dynamic libraries for the host processor.

The following are the libraries for the MARS base and task workload model:

    libmars_base.a   /* MARS base static library */
    libmars_base.so  /* MARS base dynamic library */
    libmars_task.a   /* MARS task static library */
    libmars_task.so  /* MARS task dynamic library */

MARS provides these libraries for both 32-bit and 64-bit runtimes.

The actual procedure to compile a MARS host program and to link the MARS host library may vary depending on the target platform.

    # Example host 32-bit compile on Cell B.E. platform
    HOST_CC = ppu-gcc
    HOST_CFLAGS = -m32
    $(HOST_CC) $(HOST_CFLAGS) host_prog.c -lmars_task -lmars_base -lspe2

    # Example host 64-bit compile on Cell B.E. platform
    HOST_CC = ppu-gcc
    HOST_CFLAGS = -m64
    $(HOST_CC) $(HOST_CFLAGS) host_prog.c -lmars_task -lmars_base -lspe2

- Note:

- Make sure the proper include and lib paths are set for the system.

- In the Cell B.E. compile example, libspe2 is linked in. This is required because libspe2 provides the MPU program ELF image. This may vary depending on the platform.

- Workload model libraries other than the task libraries may be provided in the future. User-implemented workload model libraries may also be used instead of the task libraries.

3.2 MPU Library

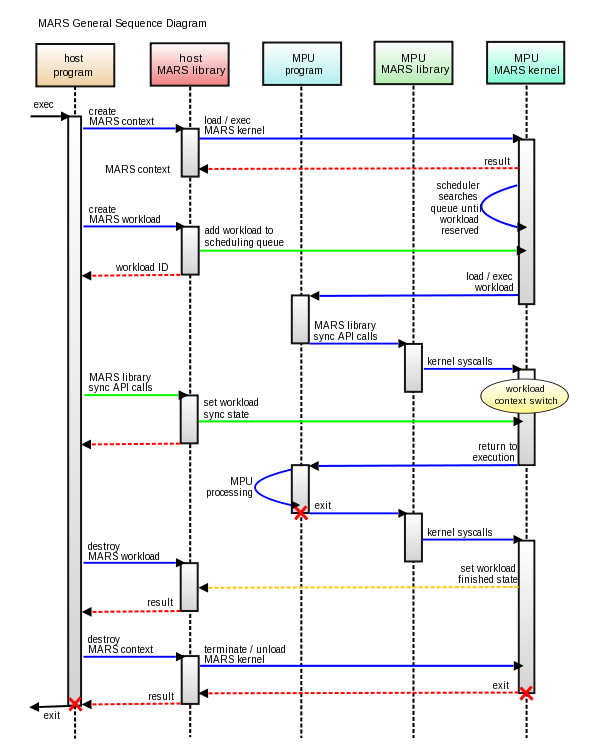

The MPU program needs to make use of the MPU library provided by MARS. Depending on the target platform, MARS should install the necessary MPU headers and libraries to the appropriate MPU paths.

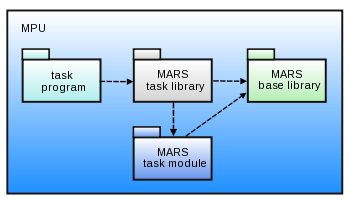

Fig. 3.2

In order to use any of the MPU library API, the user must include the necessary library API headers:

    #include <mars/task.h> /* header for task workload library API */

The MPU program written for the MPU needs to link in the MARS MPU library.

MARS provides only a static library for the MPU.

The following are the libraries for the MARS base and task workload model:

    libmars_base.a  /* MARS base static library */
    libmars_task.a  /* MARS task static library */

When compiling the MPU programs, it is also necessary to set the start address of the '.init' section to the workload base address specified for the workload model.

For example, a MARS task program should specify the task base address equal to MARS_TASK_BASE_ADDR (currently 0x4000).

The actual procedure to compile a MARS MPU program and to link the MARS MPU library may vary depending on the workload model and target platform.

    # Example MPU compile on Cell B.E. platform for task program
    MPU_CC = spu-gcc
    MPU_LD_FLAGS = -Wl,-N -Wl,-gc-sections -Wl,--section-start,.init=0x4000
    $(MPU_CC) $(MPU_LD_FLAGS) mpu_prog.c -lmars_task -lmars_base

- Note:

- Make sure the proper include and lib paths are set for the system.

- Workload model libraries other than the task libraries may be provided in the future. User-implemented workload model libraries may also be used instead of the task libraries.

3.3 General Sequence

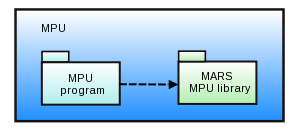

The general sequence of usage for MARS is described below:

1. Create a MARS context.

2. Create a MARS workload.

3. Process necessary synchronizations between host and MPU programs.

4. Process other host program tasks asynchronous to MPU processing.

5. Destroy the MARS workload instance (waits until MARS workload completion).

6. Destroy the MARS context.

Fig. 3.3

4 Context Management

4.1 Context Overview

A MARS context must be created before any base MARS functionality can be used. Creating the context should be the very first thing done by the host program. When all processing is completed, the host program is also responsible for destroying the created MARS context.

- See also:

- Context Management API

4.2 Context Create

Typical MARS context creation code will look like below.

    /* sample host processor side host_prog.c */
    struct mars_context *mars_ctx; /* MARS context pointer */

    /* Create a MARS context */
    int ret = mars_context_create(&mars_ctx, 0, 0);
    if (ret != MARS_SUCCESS) /* error checking */
        return USER_DEFINED_ERROR; /* create failed */

Context creation parameters:

    int mars_context_create(
        struct mars_context **mars,
        uint32_t num_mpus,
        uint8_t shared);

mars

This is the address of the pointer to MARS context. A MARS context will be allocated and its address stored in this pointer.

num_mpus

This is the number of MPUs to be used by this MARS context. The specified number of MPUs must be available on the system, or an error is returned. Specify 0 to have MARS use all available MPUs for the context.

shared

This specifies if you are requesting a shared context. If you request a shared context, a global context is returned which can be shared by any libraries that your application links to that also request a shared context.
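The `num_mpus` rule (0 means "all available", otherwise the request must not exceed what the system has) can be expressed as a small helper. This is an illustrative sketch with a hypothetical name and error convention, not how `mars_context_create` is implemented internally.

```c
#include <stdint.h>

/* Hypothetical helper: returns the number of MPUs a context would use,
 * or -1 if the request cannot be satisfied. */
int32_t resolve_num_mpus(uint32_t requested, uint32_t available)
{
    if (available == 0)
        return -1;                 /* no MPUs on this system */
    if (requested == 0)
        return (int32_t)available; /* 0 selects every available MPU */
    if (requested > available)
        return -1;                 /* cannot use more MPUs than exist */
    return (int32_t)requested;
}
```

For example, with 6 available MPUs, a request of 0 resolves to 6, a request of 4 to 4, and a request of 7 fails.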

4.3 Context Destroy

You must destroy any MARS context you create in order to free up the resources used by MARS.

    /* Destroy the MARS context previously created */
    ret = mars_context_destroy(mars_ctx);
    if (ret != MARS_SUCCESS) /* error checking */
        return USER_DEFINED_ERROR; /* destroy failed */

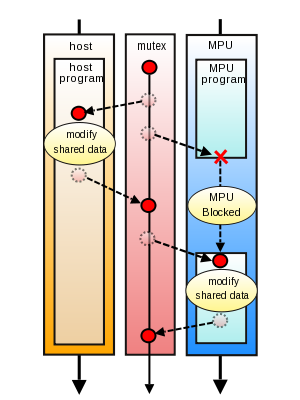

5 Mutex Management

5.1 Mutex Overview

A MARS mutex instance can be used to prevent blocks of code from executing simultaneously, whether in a host program or an MPU program. This is useful if code in the host program or MPU program accesses a common resource, such as a global variable. If a block of code is protected by a MARS mutex, it is guaranteed that no other host program thread or MPU program will execute code protected by the same mutex at the same time. The MARS mutex is independent of the MARS context and the MARS workload model. A MARS mutex can be used in a host program without even creating a MARS context. A MARS mutex can also be used in an MPU program independent of any MARS workload model or API. However, for an MPU program that is independent of any MARS workload model, the user is responsible for loading and executing it, which has little to do with the usage of MARS.

The MARS mutex does not call into the MARS kernel's scheduler. This means that when some entity attempts to lock a mutex that is already locked, the mutex blocks execution of that entity until the lock can be obtained. On the MPU side, this means that the MARS kernel cannot schedule any other workloads while a MARS mutex is waiting to lock.
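The blocking behavior described above is essentially that of a spin lock: the caller loops until it acquires the lock and never hands control to a scheduler. The sketch below uses C11 `atomic_flag` on the host to show the idea; it is not the MARS mutex implementation, and the `spin_*` names are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical spin lock illustrating a lock that never yields. */
struct spin_mutex {
    atomic_flag locked;
};

void spin_init(struct spin_mutex *m)
{
    atomic_flag_clear(&m->locked);
}

/* Returns true if the lock was acquired without waiting. */
bool spin_trylock(struct spin_mutex *m)
{
    return !atomic_flag_test_and_set_explicit(&m->locked,
                                              memory_order_acquire);
}

void spin_lock(struct spin_mutex *m)
{
    /* Busy-wait: the caller does no other work until it owns the lock,
     * much as a MARS kernel cannot run other workloads meanwhile. */
    while (!spin_trylock(m))
        ;
}

void spin_unlock(struct spin_mutex *m)
{
    atomic_flag_clear_explicit(&m->locked, memory_order_release);
}
```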

- Note:

- The use of mutexes should be mainly reserved for implementing the workload model layer. Access to the mutex API is limited to the workload module layer from the MPU-side. It is left up to the workload module whether or not to provide access to mutex routines through the workload model API.

If you want to make use of synchronization methods that call into the MARS kernel's scheduler and allow for other workloads to be scheduled during the time a synchronization object waits, refer to the synchronization methods provided by the various workload models.

Fig. 5.1

- See also:

- Mutex Management API

5.2 Mutex Create

Typical MARS mutex creation code will look like below.

    /* sample host processor side host_prog.c */
    struct mars_mutex *mutex; /* MARS mutex pointer */

    /* Create a MARS mutex */
    int ret = mars_mutex_create(&mutex);
    if (ret != MARS_SUCCESS) /* error checking */
        return USER_DEFINED_ERROR; /* create failed */

5.3 Mutex Usage

In order to protect code blocks from simultaneous execution on any host program thread or MPU, lock the mutex before the code block and unlock the mutex upon completion of the code block. The lock call will return immediately if no other entities have locked the mutex. Otherwise, the lock call will block until the mutex becomes unlocked and it is able to successfully lock the mutex for itself.

    /* Lock the MARS mutex previously created */
    ret = mars_mutex_lock(mutex);
    if (ret != MARS_SUCCESS) /* error checking */
        return USER_DEFINED_ERROR; /* lock failed */

    /* critical code section */

    /* Unlock the MARS mutex previously locked */
    ret = mars_mutex_unlock(mutex);
    if (ret != MARS_SUCCESS) /* error checking */
        return USER_DEFINED_ERROR; /* unlock failed */

- Note:

- Mutex API access on the MPU side is limited to the workload module API (see Workload Module Management API).

5.4 Mutex Destroy

You must destroy any MARS mutex you create in order to free up its resources.

    /* Destroy the MARS mutex previously created */
    ret = mars_mutex_destroy(mutex);
    if (ret != MARS_SUCCESS) /* error checking */
        return USER_DEFINED_ERROR; /* destroy failed */

6 Workload Model Management

6.1 Workload Model Overview

MARS provides the ability to support various workload models. The MARS base library provides the MARS kernel and all the APIs necessary to implement the workload model. The MARS base library and kernel on its own are only responsible for scheduling abstract workload contexts from the workload queue. In order for the workload context to be managed and executed, a workload module specific to each workload model must be provided.

- Note:

- Currently MARS provides the task workload model. However, more workload models may be implemented in the future. It is also possible for users to implement their own workload model and use it specifically for their own programs.

1. Workload Model Host Library

The host-side library of the workload model will provide the user with the interface to create workload contexts and add them to the workload queue so that the workload can be scheduled for execution by the MARS kernel.

It is the responsibility of this host-side library to populate the contents of the workload context structure with all the necessary information specific to the workload model design.

Fig. 6.1a

Fig. 6.1a shows that for the task workload model, the host program depends on the MARS task host library and MARS base host library.

2. Workload Model MPU Library

The MPU-side library of the workload model will provide the user with the interface to handle any workload model specific functionalities.

It is the responsibility of this MPU-side library to handle any processing of the workload specific to the workload model design. This library will also need to call into the workload module implemented specifically for the workload model. It is left completely up to the design of each workload model as to what interfaces should be provided between the workload module and MPU-side library.

Fig. 6.1b

Fig. 6.1b shows that for the task workload model, the MPU task program depends on the MARS task MPU library and MARS base MPU library.

3. Workload Model Module

The workload module is the MPU program that is loaded and executed by the MARS kernel when a specific workload context is scheduled and ready to be executed. Each workload context needs to know the corresponding workload module that will be responsible for the execution and management of the workload.

The workload module will remain resident in the MPU storage as long as the workload it is responsible for remains in the running state. Its main function is to load and execute the MPU program specified by the currently scheduled workload context. The workload module also serves as the communication layer between the user's workload specific MPU program and the MARS kernel.

- Note:

- The workload module must be run-complete, meaning once the workload module returns execution back to the MARS kernel, it will not resume execution. Each time the workload module runs, it begins execution from its entry point. If workloads must be resumed, it is the workload module's responsibility to save the workload's program state and store any necessary information into the workload context.
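A run-complete module that must resume a workload can keep all of its progress in the workload context, so that every invocation starts from the entry point and picks up where the previous one left off. The structure and function names below are hypothetical and only model that pattern on the host; a real module would use the MARS workload module API to read and update the workload context.

```c
#include <stdint.h>

/* Hypothetical workload context: everything needed to resume lives here,
 * because the module itself keeps no state between runs. */
struct demo_workload_context {
    uint32_t phase;  /* which part of the program to run next */
    uint32_t result; /* partial result carried across runs */
};

enum demo_status { DEMO_WAIT, DEMO_FINISHED };

/* Models one run of a module: it always starts at the top, reads the
 * saved phase, does one phase of work, saves state and returns. */
enum demo_status demo_module_entry(struct demo_workload_context *ctx)
{
    switch (ctx->phase) {
    case 0:
        ctx->result = 10;
        ctx->phase = 1;
        return DEMO_WAIT; /* e.g. must wait for an event */
    case 1:
        ctx->result *= 2;
        ctx->phase = 2;
        return DEMO_WAIT;
    default:
        ctx->result += 1;
        return DEMO_FINISHED;
    }
}
```

Calling `demo_module_entry` three times on the same context yields wait, wait, finished, with a final result of 21.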

workload module entry

The entry point for the workload module must be mars_module_entry.

workload module base address

The workload module is loaded into MPU storage by the MARS kernel at the address specified by MARS_WORKLOAD_MODULE_BASE_ADDR. Since the size of the workload module varies for each workload model implementation, each workload model's module may load its workload program to a different address in MPU storage.

workload module stack

The stack symbol for the workload module stack must also be specified. The stack address should be immediately below the base address of the workload program that the workload module will load and execute.

Example of how to compile a workload module on a Cell B.E. platform:

    # Example MPU compile on Cell B.E. platform for workload module
    MPU_CC = spu-gcc
    MPU_LD_FLAGS = -Wl,-N -Wl,-gc-sections \
        -Wl,--entry,mars_module_entry -Wl,-u,mars_module_entry \
        -Wl,--section-start,.init=0x3000 \
        -Wl,--defsym=__stack=0x3ff0
    $(MPU_CC) $(MPU_LD_FLAGS) workload_module.c -lmars_base
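The addresses in the example above fit together as follows: the module is loaded at 0x3000, its stack starts just below the task program base at 0x4000 (MARS_TASK_BASE_ADDR), and 0x3ff0 keeps the initial stack pointer 16-byte aligned, as the SPU requires. The helper below only checks that arithmetic; its name and the assumption of exactly a 16-byte gap are illustrative, not MARS requirements.

```c
#include <stdint.h>

/* Illustrative layout check (hypothetical helper; values taken from the
 * Cell B.E. example): module base < stack top < workload program base. */
int layout_is_valid(uint32_t module_base, uint32_t stack_top,
                    uint32_t program_base)
{
    if (!(module_base < stack_top && stack_top < program_base))
        return 0;
    if (stack_top % 16 != 0) /* SPU stack pointer is quadword aligned */
        return 0;
    /* Assumed meaning of "immediately below": one quadword of gap */
    return program_base - stack_top == 16;
}
```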

6.2 Workload Queue API (host)

The workload queue API is the interface between the MARS context and the MARS workload model host library. The host library of a workload model implementation needs to use the workload queue API in order to provide proper workload management functionality. The workload queue API provides the basic functions to create, schedule, and remove a workload context within the workload queue. It also provides APIs to handle workload signals and to wait for specific workloads to complete.

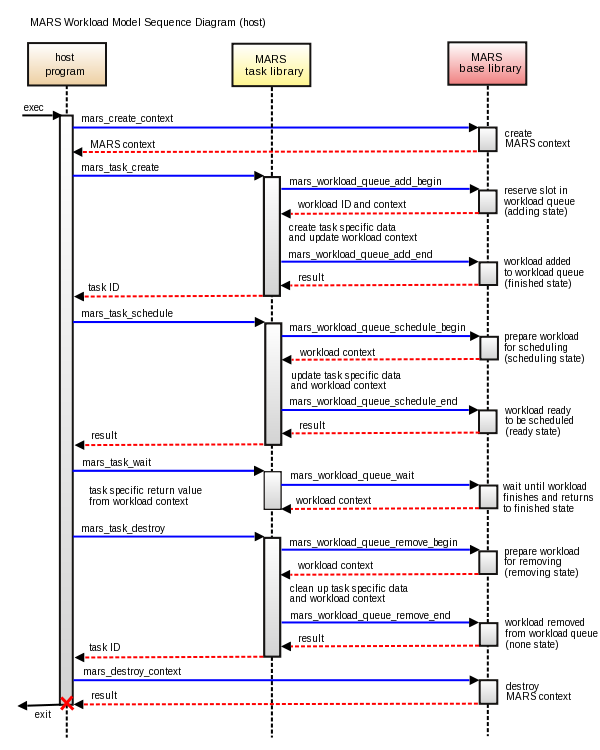

Fig. 6.2

Fig. 6.2 above shows a sample sequence of how the workload queue API can be used to implement the task workload model's host library.

- See also:

- Workload Queue Management API
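One way to picture the create, schedule and remove functions of the workload queue API is as transitions on a per-workload state. The state names and transition rules below are a hypothetical simplification for illustration; they do not reproduce the actual states used by the MARS workload queue.

```c
#include <stdbool.h>

/* Hypothetical, simplified workload states and operations. */
enum lc_state { LC_EMPTY, LC_CREATED, LC_READY, LC_RUNNING, LC_FINISHED };
enum lc_op { OP_CREATE, OP_SCHEDULE, OP_RUN, OP_FINISH, OP_REMOVE };

/* Applies an operation if it is legal in the current state.
 * Returns false and leaves the state unchanged otherwise. */
bool lc_apply(enum lc_state *s, enum lc_op op)
{
    enum lc_state next;

    switch (op) {
    case OP_CREATE:   /* add a workload context to the queue */
        if (*s != LC_EMPTY) return false;
        next = LC_CREATED;
        break;
    case OP_SCHEDULE: /* make it visible to the kernels' schedulers */
        if (*s != LC_CREATED && *s != LC_FINISHED) return false;
        next = LC_READY;
        break;
    case OP_RUN:      /* a kernel reserves and starts it */
        if (*s != LC_READY) return false;
        next = LC_RUNNING;
        break;
    case OP_FINISH:
        if (*s != LC_RUNNING) return false;
        next = LC_FINISHED;
        break;
    case OP_REMOVE:   /* only idle workloads may leave the queue */
        if (*s != LC_CREATED && *s != LC_FINISHED) return false;
        next = LC_EMPTY;
        break;
    default:
        return false;
    }
    *s = next;
    return true;
}
```

Allowing `OP_SCHEDULE` from the finished state reflects that a workload may be scheduled again until it is removed.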

6.3 Workload Module API (MPU)

The workload module API is the interface between the MARS kernel and the MARS workload module of a given workload model. The workload module needs to use the workload module API in order to provide proper workload management functionality. The workload module API provides the basic functions to get various workload information, schedule other workloads, handle workload signals, and transition the workload state and return execution to the MARS kernel.

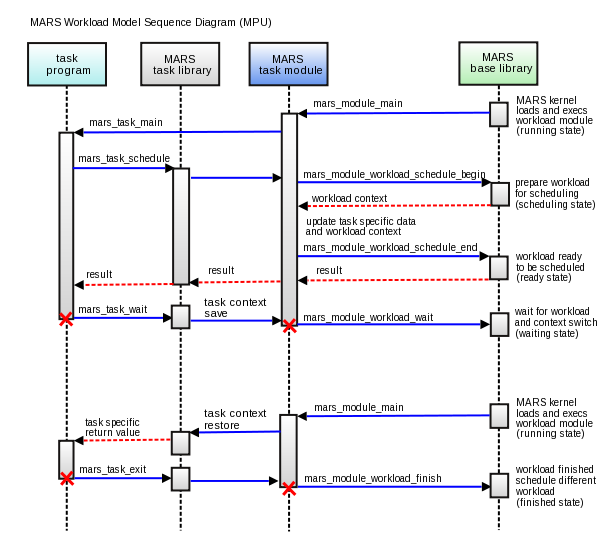

Fig. 6.3

Fig. 6.3 above shows a sample sequence of how the workload module API can be used to implement the task workload model's MPU library and task workload module.

- See also:

- Workload Module Management API

7 Task Management

7.1 Task Overview

The MARS task is one type of MARS workload model. A MARS task is a single execution of an MPU program that is scheduled to be run by the MARS kernel. Tasks can be used to run a small MPU program many times. However, the primary use of the task model is for large-grained programs that take a long time to process. Since tasks may occupy an MPU for a long time and prevent other workloads from being executed on that MPU, a task can yield the MPU to other workloads.

The MARS task synchronization API also provides various methods that let a task enter a wait state while it waits for certain events. When a task has yielded or is waiting, its state is saved into host storage and the MPU is freed up to process other available workloads.

Fig. 7.1

As shown in Fig. 7.1, the MARS kernel switches which MPU task programs are being executed on the MPUs. The kernel autonomously executes the tasks on the MPUs independently from the host. Whenever an MPU is free, the kernel will load any available task into the MPU storage for execution.

The general flow for using the MARS task is as follows:

1. (host) Prepare the task program ELF image in host storage.

2. (host) Create task instances.

3. (host) Schedule tasks for execution.

4. (task) Schedule sub tasks for execution.

5. (task) Wait for sub task completion.

6. (task) Resume execution when all sub tasks have completed.

7. (task) Process and finish task execution.

8. (host) Wait for all tasks to complete.

9. (host) Destroy all task instances.

- See also:

- Task Management API

7.2 Task Program

The MARS task program is the MPU program that contains the actual code executed when the task is run by the MARS kernel. Just as the host program is compiled using the host compiler, task programs are compiled using the MPU's compiler. The MARS task program must define the mars_task_main function, which is the program's main entry point. This function is called when the kernel is ready to run the task.

A task program finishes execution when it calls mars_task_exit or returns from the mars_task_main function.

The arguments (mars_task_args) passed into the mars_task_main function are specified in the host program when calling mars_task_schedule to schedule the task for execution. If no arguments are specified when calling mars_task_schedule, the arguments passed into mars_task_main are uninitialized and their contents are undefined.

    /* sample MPU side mpu_prog.c */
    #include <stdio.h>
    #include <mars/task.h>

    int mars_task_main(const struct mars_task_args *task_args)
    {
        (void)task_args; /* arguments are not used by this task */
        printf("Hello World!\n");
        return 0;
    }
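Because unset arguments are undefined, a host program may prefer to always pass a fully initialized argument block so the task sees predictable values. The sketch uses a hypothetical stand-in type, `struct demo_task_args`, rather than the real `struct mars_task_args`, whose exact layout should be taken from `<mars/task.h>`; the helper `make_args` is likewise hypothetical.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for a task argument block; see <mars/task.h>
 * for the real struct mars_task_args definition. */
struct demo_task_args {
    union {
        uint32_t u32[8];
        uint64_t u64[4];
    } type;
};

/* Host side: build an argument block carrying a host storage address
 * and an element count, with every other slot zeroed. */
struct demo_task_args make_args(uint64_t buffer_ea, uint64_t count)
{
    struct demo_task_args args;

    memset(&args, 0, sizeof(args)); /* no undefined slots */
    args.type.u64[0] = buffer_ea;   /* e.g. address of input data */
    args.type.u64[1] = count;       /* e.g. number of elements */
    return args;
}
```

The task side would then read the same slots (here `u64[0]` and `u64[1]`) from the arguments passed to `mars_task_main`.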

7.3 Task Create

Typical MARS task creation code looks like the following.

```c
/* sample host processor side host_prog.c */
struct mars_context *mars_ctx;  /* MARS context pointer */
struct mars_task_id task_id;    /* MARS task id instance */

...
/* Assume MARS context is created as shown above */
...

/* Create the task instance */
int ret = mars_task_create(mars_ctx, &task_id, "Task", elf_image, 0);
if (ret != MARS_SUCCESS)          /* error checking */
    return USER_DEFINED_ERROR;    /* create failed */
```

MARS task creation will initialize a workload instance in the MARS context's workload queue.

The task id is returned to the user through the id parameter. The task id must be saved, since it is needed to manage the task.

A created task is not executed until it is scheduled for execution by calling mars_task_schedule.

Any created task should be cleaned up with a call to mars_task_destroy once the task will no longer be scheduled for execution.

Task creation parameters:

```c
int mars_task_create(
    struct mars_context *mars,
    struct mars_task_id *id,
    const char *name,
    const void *elf_image,
    uint32_t context_save_size);
```

mars

This is the pointer to a created MARS context.

id

This is the address of a task id instance that will be initialized upon successful task creation.

name

This specifies a string identifier for the task. The string length must be no longer than MARS_TASK_NAME_LEN_MAX.

elf_image

This specifies the address of the MPU program ELF image loaded into host storage. This MPU program must be a MARS task program.

context_save_size

The size of the context save area to allocate in host storage for use during a task context switch (see 7.5 Task Switching).

7.4 Task Execution

Typical MARS task execution code looks like the following.

```c
/* sample host processor side host_prog.c */
struct mars_task_args task_args;  /* MARS task args; initialize the fields the task program reads */

/* Sets the task to a schedulable state */
ret = mars_task_schedule(&task_id, &task_args, 0);
if (ret != MARS_SUCCESS)          /* error checking */
    return USER_DEFINED_ERROR;    /* schedule failed */

/* Host processor can process something while the MPUs execute the tasks asynchronously. */
...

/* Blocks until the scheduled task has finished execution */
ret = mars_task_wait(&task_id, NULL);
if (ret != MARS_SUCCESS)          /* error checking */
    return USER_DEFINED_ERROR;    /* wait failed */
```

A MARS task is executed by scheduling a created task to be run by the MARS kernel. The MARS kernels running on the MPUs then automatically pick up the task and load it into MPU storage to begin execution.

While the MARS kernels process various workloads on the MPU side, the host is free to do any other processing asynchronous to any workload processing on the MPUs.

If desired, the host can then wait for a specific scheduled task to finish execution.

Any number of host threads or tasks can wait for a specific task to complete execution, as long as they hold the task's id. However, the task being waited on should not be rescheduled until all wait calls for the task have returned. Otherwise, it is not guaranteed that all wait calls will return after the completion of the initial schedule call.

After a MARS task is created, it may be scheduled for execution any number of times until it is destroyed. However, a task can only be scheduled if it is not currently in the process of execution.
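The scheduling rules above (a task may be scheduled repeatedly, but never while it is still executing, and should only be destroyed once it will no longer be scheduled) can be captured as a small state machine. The following is a minimal, host-only sketch in plain C that does not use MARS at all; struct model_task and the model_* functions are hypothetical names for illustration, not part of the MARS API.

```c
/* Hypothetical model of the task lifecycle rules; not the MARS API. */
#include <stdbool.h>

enum model_task_state {
    MODEL_TASK_CREATED,    /* created, not yet scheduled */
    MODEL_TASK_SCHEDULED,  /* scheduled: waiting for an MPU or running */
    MODEL_TASK_FINISHED,   /* returned from main (or called exit) */
    MODEL_TASK_DESTROYED   /* destroyed; the id is no longer usable */
};

struct model_task {
    enum model_task_state state;
    int exit_code;
};

void model_task_create(struct model_task *t)
{
    t->state = MODEL_TASK_CREATED;
    t->exit_code = 0;
}

/* A task may be scheduled any number of times, but only when it is not
 * already in the process of execution and has not been destroyed. */
bool model_task_schedule(struct model_task *t)
{
    if (t->state != MODEL_TASK_CREATED && t->state != MODEL_TASK_FINISHED)
        return false;
    t->state = MODEL_TASK_SCHEDULED;
    return true;
}

/* Records completion, as when the task program returns from main. */
void model_task_finish(struct model_task *t, int exit_code)
{
    t->state = MODEL_TASK_FINISHED;
    t->exit_code = exit_code;
}

/* Destroying is only allowed once the task is no longer scheduled. */
bool model_task_destroy(struct model_task *t)
{
    if (t->state == MODEL_TASK_SCHEDULED || t->state == MODEL_TASK_DESTROYED)
        return false;
    t->state = MODEL_TASK_DESTROYED;
    return true;
}
```

In this model, scheduling the same task twice in a row fails until model_task_finish records completion, which mirrors the rule that a task can only be scheduled when it is not currently executing.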

A MARS task that has been created by the host can be scheduled for execution by both the host and MPU-side APIs. The behavior of scheduling a task from host or MPU is identical in nature. If a task schedules a sub task for execution, and waits for the sub task to finish execution (assuming the use of a blocking wait call), it will yield its own execution until the sub task has completed. This allows for other workloads to be processed on the MPU that was executing the waiting task.

Task scheduling parameters:

```c
int mars_task_schedule(
    struct mars_task_id *id,
    struct mars_task_args *args,
    uint8_t priority);
```

id

This is the pointer to the initialized task id of the task to be scheduled for execution.

args

This specifies the argument structure that will be passed into the task program's mars_task_main function. If NULL is specified for args, the args passed into the mars_task_main function are uninitialized and their state is undefined. Specify NULL only if you are certain the task program will not access the args passed into its mars_task_main function.

priority

This specifies the priority of the task. Task priorities range from 0 to 255, from lowest to highest priority. Higher priority tasks will be scheduled over lower priority tasks if both are available to be scheduled for execution.
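To illustrate the priority rule, here is a hypothetical sketch of how a free MPU might choose among tasks that are available to run: the highest priority wins. This is not the MARS kernel's actual scheduler; struct model_ready_task and model_pick_next are illustrative names, and breaking ties in favor of the lowest index is an assumption of this sketch.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical view of one task as seen by a free MPU. */
struct model_ready_task {
    uint8_t priority;  /* 0 (lowest) .. 255 (highest) */
    bool ready;        /* true if available to be scheduled */
};

/* Returns the index of the task to run next, or -1 if none is ready.
 * Ties go to the lowest index (an assumption of this sketch). */
int model_pick_next(const struct model_ready_task *tasks, size_t n)
{
    int best = -1;

    for (size_t i = 0; i < n; i++) {
        if (!tasks[i].ready)
            continue;
        if (best < 0 || tasks[i].priority > tasks[best].priority)
            best = (int)i;
    }
    return best;
}
```

A task that is not ready (for example, one in the waiting state) is skipped regardless of its priority.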

7.5 Task Switching

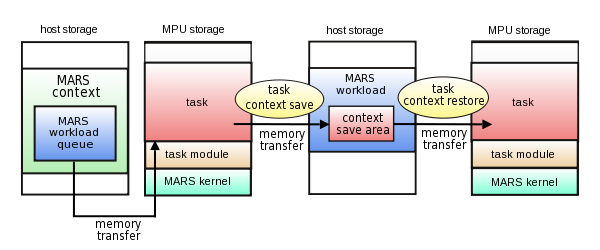

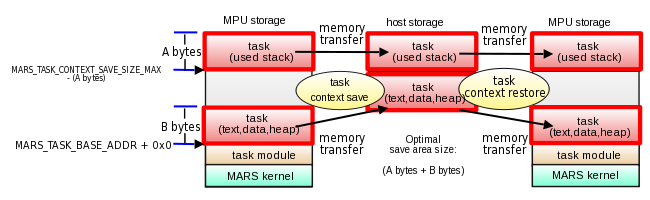

A MARS task switch occurs when a running task either yields or enters a waiting state due to a call to a blocking synchronization method. The task switch, performed by the MARS kernel, saves the current state of the task to a pre-allocated context save area in host storage. The context save area is allocated during task creation if a non-zero context save size is passed to mars_task_create.

When the task is no longer in a waiting state and is scheduled by the kernel to run again, the saved task context is restored from host storage back into MPU storage, and the task resumes execution where it left off.

This task switching allows the kernel to schedule other workloads to be executed on the MPU without wasting valuable processing time while some tasks are left in a waiting state.
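The save and restore steps of a task switch can be pictured as copying the used region of MPU storage out to the context save area in host storage and back again. The sketch below simulates both storages as byte arrays; MODEL_MPU_STORAGE_SIZE, struct model_context_save, and the model_* functions are hypothetical and only model the idea, not the kernel's implementation.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MODEL_MPU_STORAGE_SIZE 4096  /* hypothetical size, for illustration */

/* Hypothetical host-side context save area for one task. */
struct model_context_save {
    uint8_t *area;  /* allocated in host storage at task creation */
    size_t size;    /* context_save_size given at creation */
};

/* Switch out: copy the used region of MPU storage to host storage.
 * Returns -1 if the task has no save area or it is too small. */
int model_task_switch_out(const uint8_t *mpu_storage, size_t used,
                          struct model_context_save *save)
{
    if (save->area == NULL || save->size < used)
        return -1;
    memcpy(save->area, mpu_storage, used);
    return 0;
}

/* Switch in: restore the saved region so the task resumes where it left off. */
void model_task_switch_in(uint8_t *mpu_storage, size_t used,
                          const struct model_context_save *save)
{
    memcpy(mpu_storage, save->area, used);
}
```

Between the two calls, another workload is free to overwrite MPU storage, since the task's state lives in host storage until it is restored.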

Fig. 7.5a

Limitations

It is important to note the limitations of a task switch:

1. A task can perform a task switch only if it was created with a context save area (see mars_task_create). If no context save area is specified for the task, yield calls and any blocking calls that may put the task into a waiting state will result in an error.

2. Any MPU-side task API that may call into the MARS kernel scheduler to enter a waiting state is marked as a Task Switch Call in 10 API Reference. Before calling any MPU-side Task Switch Call, the user is responsible for making sure that all memory transfer operations have completed. If there are incomplete memory transfer operations when a task switch occurs, the effects are undefined.

3. MPU-side task API calls that internally handle memory transfers come in *_begin/*_end pairs, and no other MPU-side Task Switch Call may be made between a *_begin call and its paired *_end call. The reason for this limitation is the same as in (2): the *_begin call, whether or not it is itself a Task Switch Call, may start a memory transfer, and that transfer is not guaranteed to complete until the paired *_end call.
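Limitations (1) through (3) amount to a simple precondition on every Task Switch Call. The following hypothetical bookkeeping sketch tracks *_begin calls that have no matching *_end yet; struct model_mpu_task and the model_* functions are illustrative only, and a real task relies on the platform's memory transfer completion mechanism rather than a counter like this.

```c
#include <stdbool.h>

/* Hypothetical per-task bookkeeping; not part of the MARS API. */
struct model_mpu_task {
    unsigned outstanding_transfers;  /* *_begin calls without a matching *_end */
    bool has_save_area;              /* created with context_save_size != 0 */
};

void model_transfer_begin(struct model_mpu_task *t)
{
    t->outstanding_transfers++;
}

void model_transfer_end(struct model_mpu_task *t)
{
    if (t->outstanding_transfers > 0)
        t->outstanding_transfers--;
}

/* A Task Switch Call is only safe with a save area (limitation 1) and
 * with no memory transfers still in flight (limitations 2 and 3). */
bool model_task_switch_call_allowed(const struct model_mpu_task *t)
{
    return t->has_save_area && t->outstanding_transfers == 0;
}
```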

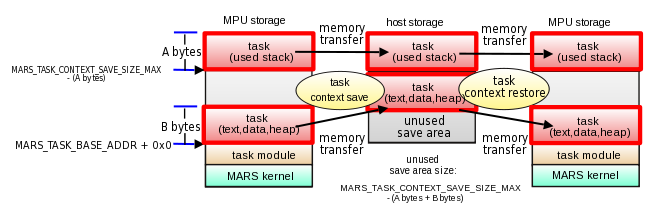

Context save size

When creating the task, you must specify the size of the context save area that will be allocated and used during a task switch. By default, the task module saves and restores only the used areas of MPU storage necessary to perform the task switch.

You can specify one of the following for 'context_save_size' when creating the task with mars_task_create:

1. 0 - No context save area will be allocated. Use this to create a run-to-completion task that never does a task switch.

2. MARS_TASK_CONTEXT_SAVE_SIZE_MAX - The maximum area needed for a context save will be allocated. This option always allocates the maximum area required for any task to context switch, regardless of whether the particular task being created needs all of it.

Fig. 7.5b

3. User-specified size - The user can specify the size of the context save area needed to task switch their specific task. For example, if the task's text, data, heap and stack occupy only N bytes of MPU storage, specifying context_save_size = N avoids allocating MARS_TASK_CONTEXT_SAVE_SIZE_MAX bytes, which would waste MARS_TASK_CONTEXT_SAVE_SIZE_MAX - N bytes of host storage.

Fig. 7.5c
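The user-specified size in option 3 is simple arithmetic: N = text + data + heap + stack. The sketch below computes N and the host storage saved compared with the maximum. MODEL_CONTEXT_SAVE_SIZE_MAX is a hypothetical stand-in whose value (256 KB here) is an assumption; use the MARS_TASK_CONTEXT_SAVE_SIZE_MAX defined by the MARS headers, and take the actual footprint of your task from your MPU toolchain.

```c
#include <stddef.h>

/* Hypothetical stand-in for MARS_TASK_CONTEXT_SAVE_SIZE_MAX. */
#define MODEL_CONTEXT_SAVE_SIZE_MAX (256u * 1024u)

/* Bytes of MPU storage used by each part of the task program. */
struct model_task_footprint {
    size_t text, data, heap, stack;
};

/* N = text + data + heap + stack */
size_t model_context_save_size(const struct model_task_footprint *f)
{
    return f->text + f->data + f->heap + f->stack;
}

/* Host storage saved by passing N instead of the maximum. */
size_t model_bytes_saved(size_t n)
{
    return n < MODEL_CONTEXT_SAVE_SIZE_MAX ? MODEL_CONTEXT_SAVE_SIZE_MAX - n : 0;
}
```

For a task using 16 KB of text, 4 KB of data, 8 KB of heap and 4 KB of stack, N is 32768 bytes, so 229376 bytes of host storage are saved per task under the assumed maximum.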

7.6 Task Destroy

You must destroy every MARS task you create to free up the resources it uses.

```c
/* sample host processor side host_prog.c */
/* Destroy the task previously created */
ret = mars_task_destroy(&task_id);
if (ret != MARS_SUCCESS)          /* error checking */
    return USER_DEFINED_ERROR;    /* destroy failed */
```

MARS task destroy cleans up the created task and finalizes its workload instance in the workload queue. Once the task is destroyed, its resources are freed.

This function should be called when the task will no longer be scheduled for execution by a call to mars_task_schedule. Once a task is destroyed, the task and task id will become obsolete.

8 Task Synchronization

8.1 Overview

The MARS Task Synchronization API provides various methods of synchronization between the host program running on the host processor and MARS tasks running on the MPUs, as well as between MARS tasks running across various MPUs.

As described previously, enabling MARS tasks to send and receive data directly between each other, independently of the host, is an important factor in improving the usability and efficiency of the MPUs. MARS provides various synchronization and communication functions that enable efficient interaction between MARS tasks, or between MARS tasks and host programs.

The MARS Task Synchronization API provides the following types of synchronization objects:

(1) MARS Task Barrier

This is used to make multiple MARS tasks wait at a certain point in a program and to resume the task execution when all tasks are ready.

(2) MARS Task Event Flag

This is used to send event notifications between MARS tasks or between MARS tasks and host programs.

(3) MARS Task Queue

This is used to provide a FIFO queue mechanism for data transfer between MARS tasks or between MARS tasks and host programs.

(4) MARS Task Semaphore

This is used to limit the number of concurrent accesses to shared resources among MARS tasks.

(5) MARS Task Signal

This is used to signal a MARS task in the waiting state to change state so that it can be scheduled to continue execution.

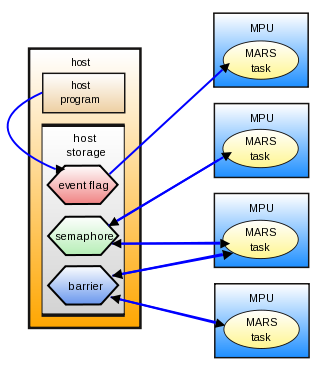

Fig. 8.1

As shown in Fig. 8.1, task synchronization instances are created in host storage. Both the host program and the MARS tasks running on the MPUs access these instances resident in host storage.

- See also:

- Task Synchronization API

8.2 Benefits

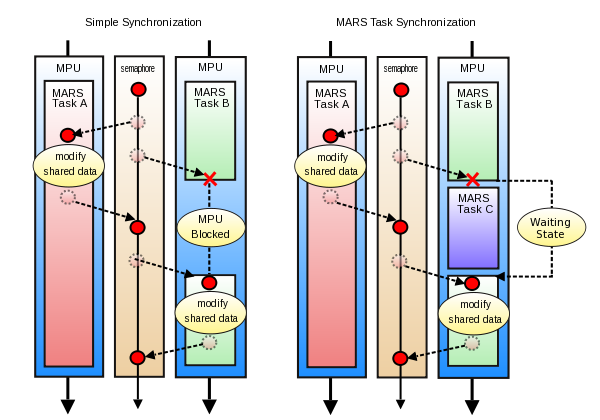

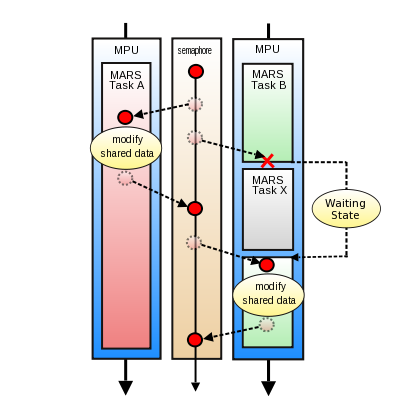

The MARS Task Synchronization API is specific to MARS tasks. Many of the synchronization methods provided are blocking calls, meaning that when one is called and certain conditions are not met, the calling task enters a waiting state, which may result in a task switch (See 8.6 Task Semaphore).

Fig. 8.2

In Fig. 8.2, the semaphore synchronization method is used as an example to show the benefit of using the MARS task synchronization over a simple synchronization method.

When using simple synchronization methods within a MARS task, if the synchronization method blocks, it will force the task to wait until the synchronization method allows for execution to resume. If a task must wait on some synchronization method for a very long time, the MPU executing the task will be forced to block without being able to process anything else during that time.

The MARS task synchronization methods prevent the wasting of valuable MPU processing time while a task blocks on a synchronization method. When a MARS task blocks on a synchronization method, the task itself enters a waiting state. This allows the MPU executing the task to do a task switch and execute some other task that is not in a waiting state. Once the original task receives the synchronization event it was waiting for, it is returned to a runnable state and will be scheduled for resumed execution when an MPU becomes available.

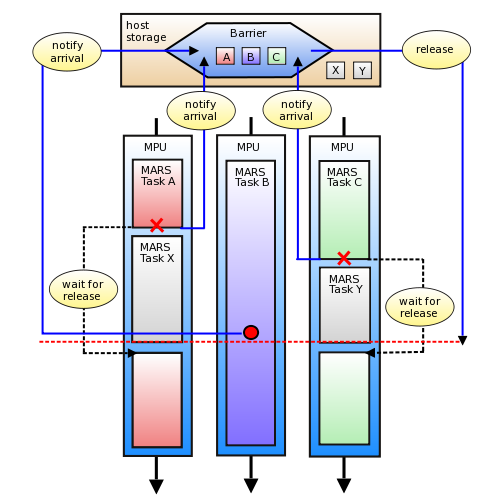

8.3 Task Barrier

The MARS task barrier allows the synchronization of multiple tasks. At barrier initialization, the total number of tasks that need to be synchronized is specified. When each task arrives at the barrier, it notifies the barrier and enters a waiting state until the barrier is released. When the total number of tasks specified at initialization have arrived at the barrier and notified it, the barrier is released and all tasks are returned to the ready state to be scheduled to run once again.

The general flow for using the MARS task barriers is as follows:

1. (host) Allocate memory for task barrier structure.

2. (host) Create task barrier.

3. (host) Create tasks and schedule for execution.

4. (task) Process until synchronization point.

5. (task) Notify barrier of synchronization point arrival.

6. (task) Wait until all tasks notify barrier and barrier is released.

7. (task) Finish task execution.

8. (host) Wait for task completion and finalize tasks.

9. (host) Destroy task barrier and free allocated memory.

Fig. 8.3

In Fig. 8.3, a MARS task barrier is created to wait on notifications from 3 separate tasks.

First, Task A reaches the synchronization point and notifies the barrier. Since the barrier has not yet been released, Task A enters a waiting state and yields the MPU to execute another Task X.

Next, Task C reaches the synchronization point soon after and, after notifying the barrier, yields MPU execution to another Task Y. Finally, Task B reaches the synchronization point, at which point it notifies the barrier and the barrier is released.

Once the barrier is released, Task B continues with execution while both Tasks A and C are available to be scheduled for execution as soon as there is an available MPU.
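The barrier walkthrough above can be reproduced with a cooperative, single-threaded model in which a "wait" simply records the task as waiting instead of blocking an MPU. This is a hypothetical sketch, not the MARS Task Barrier API: struct model_barrier, MODEL_MAX_WAITERS, and the model_* functions are illustrative names, and resetting the barrier after release is an assumption of the sketch.

```c
#include <stdbool.h>
#include <stdint.h>

#define MODEL_MAX_WAITERS 32  /* hypothetical limit; total must not exceed it */

struct model_barrier {
    uint32_t total;                  /* tasks that must arrive */
    uint32_t arrived;                /* tasks that have notified so far */
    int waiting[MODEL_MAX_WAITERS];  /* ids of tasks in the waiting state */
    uint32_t num_waiting;
};

void model_barrier_init(struct model_barrier *b, uint32_t total)
{
    b->total = total;
    b->arrived = 0;
    b->num_waiting = 0;
}

/* Task `id` notifies its arrival. Returns false if it must wait (its MPU
 * can switch to another task). Returns true if this arrival releases the
 * barrier; the ids of the waiting tasks, now ready again, are copied to
 * `woken` and their count is stored in `*num_woken`. */
bool model_barrier_arrive(struct model_barrier *b, int id,
                          int *woken, uint32_t *num_woken)
{
    *num_woken = 0;
    b->arrived++;
    if (b->arrived < b->total) {
        b->waiting[b->num_waiting++] = id;
        return false;
    }
    for (uint32_t i = 0; i < b->num_waiting; i++)
        woken[i] = b->waiting[i];
    *num_woken = b->num_waiting;
    b->arrived = 0;      /* reset for reuse (an assumption of this sketch) */
    b->num_waiting = 0;
    return true;
}
```

Replaying Fig. 8.3 with Tasks A, C and B as ids 0, 2 and 1: the first two arrivals return false (A and C wait), and the third returns true with A and C reported as ready to resume.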

- See also:

- Task Barrier API

8.4 Task Event Flag

The MARS task event flag allows synchronization between multiple tasks and the host program by sending and receiving 32-bit event flags between one another.

The event flags can be sent from the host program to a MARS task or vice versa, as well as between multiple MARS tasks. While waiting on certain event flags to be received, the task transitions to the waiting state until the event flag is received.

The general flow for using the MARS task event flag is as follows:

1. (host) Allocate memory for task event flag structure.

2. (host) Create task event flag.

3. (host) Create tasks and schedule for execution.

4. (task) Process until synchronization point.

5. (task) Wait until specified event flag bit is set.

6. (host or task) Set the specified event flag bit.

7. (task) Finish task execution.

8. (host) Wait for task completion and finalize tasks.

9. (host) Destroy task event flag and free allocated memory.

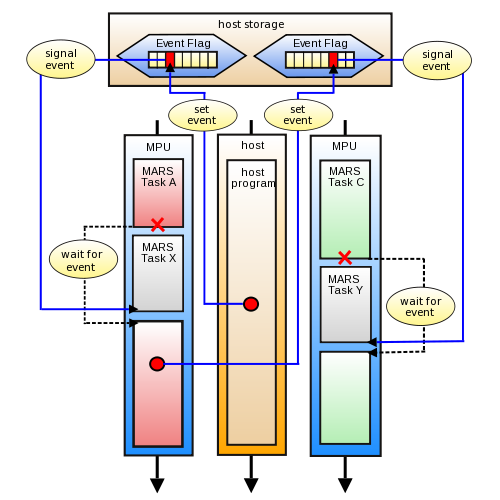

Fig. 8.4

In Fig. 8.4, there are 2 separate MARS event flags created. One event flag is created for host to MPU communication, while the other is created for MPU to MPU communication.

First, Task A reaches the synchronization point and waits for a specific event flag bit to be set. As it waits for the event, it enters the waiting state and yields execution of the MPU so that Task X can run.

Next, Task B reaches its synchronization point and allows for Task Y to run while it waits for the event.

Next, the host program sets the event flag bit Task A is waiting on, at which point Task A becomes available for resumed execution.

Finally, as Task A becomes scheduled and resumes execution it then sets the event flag bit Task B is waiting on, at which point Task B becomes available for resumed execution.
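The same cooperative style can model the event flag scenario in Fig. 8.4. For brevity, the model keeps one 32-bit value and at most one waiting task per flag. The OR/AND matching modes and every model_* name are assumptions of this hypothetical sketch; see the Task Event Flag API for the actual MARS calls and options.

```c
#include <stdbool.h>
#include <stdint.h>

enum model_mask_mode { MODEL_MASK_OR, MODEL_MASK_AND };

struct model_event_flag {
    uint32_t bits;                   /* 32-bit event flag value */
    bool has_waiter;                 /* at most one waiting task here */
    uint32_t wait_mask;
    enum model_mask_mode wait_mode;
};

/* OR: any masked bit set; AND: all masked bits set. */
static bool model_satisfied(uint32_t bits, uint32_t mask,
                            enum model_mask_mode mode)
{
    return mode == MODEL_MASK_OR ? (bits & mask) != 0
                                 : (bits & mask) == mask;
}

void model_event_flag_init(struct model_event_flag *f)
{
    f->bits = 0;
    f->has_waiter = false;
    f->wait_mask = 0;
    f->wait_mode = MODEL_MASK_OR;
}

/* Returns true if the condition already holds (the task keeps running);
 * otherwise records the task as waiting and returns false. */
bool model_event_flag_wait(struct model_event_flag *f, uint32_t mask,
                           enum model_mask_mode mode)
{
    if (model_satisfied(f->bits, mask, mode))
        return true;
    f->has_waiter = true;
    f->wait_mask = mask;
    f->wait_mode = mode;
    return false;
}

/* Sets bits; returns true if this makes the waiting task available
 * for resumed execution. */
bool model_event_flag_set(struct model_event_flag *f, uint32_t bits)
{
    f->bits |= bits;
    if (f->has_waiter && model_satisfied(f->bits, f->wait_mask, f->wait_mode)) {
        f->has_waiter = false;
        return true;
    }
    return false;
}
```

With one flag for host-to-Task-A events and another for Task-A-to-Task-B events, the host's set wakes Task A, and Task A's set then wakes Task B.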

- See also:

- Task Event Flag API

8.5 Task Queue

The MARS task queue allows sending and receiving data between multiple MARS tasks and the host program.

From either a host program or a MARS task you can push data into the queue, and from either a host program or a MARS task you can pop data out of the queue as soon as it becomes available.

The advantage of the MARS task queue is that when a MARS task requests to do a pop and no data is available yet to be received from the queue, the MARS task will enter a waiting state. As soon as data is available to be popped from the queue, the MARS task can be scheduled for resumed execution with the received data.

The general flow for using the MARS task queue is as follows:

1. (host) Allocate memory for task queue structure.

2. (host) Create task queue.

3. (host) Create tasks and schedule for execution.

4. (task) Process until synchronization point.

5. (task) Pop queue and wait until data is available.

6. (host or task) Push queue with data.

7. (task) Receive data and finish task execution.

8. (host) Wait for task completion and finalize tasks.

9. (host) Destroy task queue and free allocated memory.

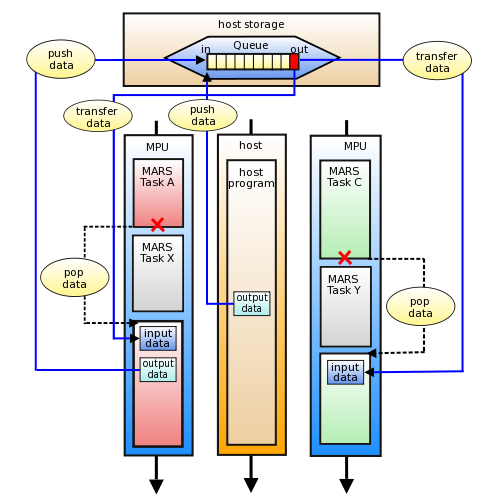

Fig. 8.5

In Fig. 8.5, a MARS queue instance is created to send and receive data between a host program and MARS tasks.

First, Task A reaches the synchronization point, where it requests to pop data from the queue. At this point in time, nobody has pushed data into the queue, and the queue is empty. This causes Task A to enter a waiting state and yield MPU execution to Task X.

Next, Task B reaches its synchronization point and requests to pop data. Since the queue is still empty, it also enters the waiting state and yields MPU execution to another Task Y.

Next, the host program pushes some data into the queue, at which point Task A becomes available for resumed execution with the data received from the host.

Finally, as Task A becomes scheduled and resumes execution, it then pushes some other data into the queue, at which point Task B becomes available for resumed execution with the data from Task A received.
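A pop on an empty queue puts the task into the waiting state, and a later push hands the data to the waiting task. The hypothetical sketch below models that with a fixed-size ring buffer and a FIFO list of waiting task ids. The model_* names, the fixed depth, and returning -2 on a full queue (rather than making the pushing task wait) are simplifications of this sketch and may differ from the MARS Task Queue API.

```c
#include <stdbool.h>
#include <stdint.h>

#define MODEL_QUEUE_DEPTH 8  /* hypothetical queue depth */
#define MODEL_MAX_POPPERS 8  /* hypothetical limit on tasks waiting at once */

struct model_queue {
    uint64_t items[MODEL_QUEUE_DEPTH];
    uint32_t head, count;
    int poppers[MODEL_MAX_POPPERS];  /* ids of tasks waiting to pop, FIFO */
    uint32_t num_poppers;
};

void model_queue_init(struct model_queue *q)
{
    q->head = 0;
    q->count = 0;
    q->num_poppers = 0;
}

/* Pop for task `id`: returns true and stores the item if data is
 * available; otherwise records `id` as waiting and returns false. */
bool model_queue_pop(struct model_queue *q, int id, uint64_t *out)
{
    if (q->count == 0) {
        q->poppers[q->num_poppers++] = id;
        return false;
    }
    *out = q->items[q->head];
    q->head = (q->head + 1) % MODEL_QUEUE_DEPTH;
    q->count--;
    return true;
}

/* Push: if a task is waiting to pop, hand the item to the earliest
 * waiter and return its id (it becomes ready to resume). Otherwise
 * store the item and return -1, or return -2 if the queue is full. */
int model_queue_push(struct model_queue *q, uint64_t item, uint64_t *delivered)
{
    if (q->num_poppers > 0) {
        int id = q->poppers[0];
        for (uint32_t i = 1; i < q->num_poppers; i++)
            q->poppers[i - 1] = q->poppers[i];
        q->num_poppers--;
        *delivered = item;
        return id;
    }
    if (q->count == MODEL_QUEUE_DEPTH)
        return -2;
    q->items[(q->head + q->count) % MODEL_QUEUE_DEPTH] = item;
    q->count++;
    return -1;
}
```

Replaying Fig. 8.5, Tasks A (id 0) and B (id 1) both wait on the empty queue; the host's push is delivered to Task A, and Task A's subsequent push is delivered to Task B.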

- See also:

- Task Queue API

8.6 Task Semaphore

The MARS task semaphore allows synchronization between multiple tasks by preventing simultaneous access to a shared resource. When the semaphore is created, you specify how many tasks may access it simultaneously at any given time.

Whenever a task wants to access a semaphore-protected shared resource, it must first acquire access to the semaphore (P operation). When done accessing the shared resource, it must then release access to the semaphore (V operation). If a task requests the semaphore while other tasks already hold the total number of allowed accesses, the requesting task transitions to the waiting state until another task releases the semaphore and access is obtained.

The general flow for using the MARS task semaphore is as follows:

1. (host) Allocate memory for task semaphore structure.

2. (host) Create task semaphore.

3. (host) Create tasks and schedule for execution.

4. (task) Process until synchronization point.

5. (task) Acquire semaphore and wait until semaphore is obtained.

6. (task) Modify shared resource data.

7. (task) Release semaphore and finish execution.

8. (host) Wait for task completion and finalize tasks.

9. (host) Destroy task semaphore and free allocated memory.

Fig. 8.6

In Fig. 8.6, a MARS semaphore is created to be shared between 2 MARS tasks. This semaphore is used to prevent simultaneous access to some shared data in host storage.

First, Task A reaches the synchronization point, where it requests to acquire the semaphore. Since no other task holds the semaphore, Task A successfully acquires the semaphore without having to wait. It then continues execution to modify some shared data in host storage.

Next, Task B reaches the synchronization point where it requests to acquire the same semaphore to modify the same shared data in host storage. At the time of the request to acquire the semaphore, Task A still holds the semaphore, causing Task B to enter a waiting state. As Task B is waiting, it yields MPU execution to another Task X.

Next, Task A completes modifying the shared data in host storage and releases the semaphore. This allows Task B to become available for resumed execution.

Finally, as Task B becomes scheduled for resumed execution, it continues to modify the shared data in host storage. Task B then releases the semaphore when access to the shared data is complete.
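The P and V operations in Fig. 8.6 can be modeled as a counter plus a FIFO list of waiting task ids, where a release hands access directly to the earliest waiter. This is a hypothetical, single-threaded sketch; the model_* names and the FIFO wake-up order are assumptions, not documented MARS behavior.

```c
#include <stdbool.h>
#include <stdint.h>

#define MODEL_MAX_SEM_WAITERS 8  /* hypothetical limit on waiting tasks */

struct model_semaphore {
    int32_t count;                       /* accesses still available */
    int waiters[MODEL_MAX_SEM_WAITERS];  /* ids of waiting tasks, FIFO */
    uint32_t num_waiters;
};

void model_semaphore_init(struct model_semaphore *s, int32_t count)
{
    s->count = count;
    s->num_waiters = 0;
}

/* P operation: returns true if access is obtained immediately;
 * otherwise task `id` is recorded as waiting and false is returned. */
bool model_semaphore_acquire(struct model_semaphore *s, int id)
{
    if (s->count > 0) {
        s->count--;
        return true;
    }
    s->waiters[s->num_waiters++] = id;
    return false;
}

/* V operation: if a task is waiting, access passes directly to it and
 * its id is returned (it becomes ready to resume); otherwise -1. */
int model_semaphore_release(struct model_semaphore *s)
{
    if (s->num_waiters > 0) {
        int id = s->waiters[0];
        for (uint32_t i = 1; i < s->num_waiters; i++)
            s->waiters[i - 1] = s->waiters[i];
        s->num_waiters--;
        return id;
    }
    s->count++;
    return -1;
}
```

With a count of 1, Task A (id 0) acquires immediately, Task B (id 1) waits, and Task A's release makes Task B ready; Task B's own release then restores the count to 1.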

- See also:

- Task Semaphore API

8.7 Task Signal

The MARS task signal is the simplest form of synchronization between a host program and multiple MARS tasks.

From either a host program or a MARS task you can specify a certain task to signal. When the task waits for a signal, it transitions to the waiting state until the signal is received.

The general flow for using the MARS task signal is as follows:

1. (host) Create tasks and schedule for execution.

2. (task) Process until synchronization point.

3. (task) Wait for signal.

4. (host or task) Send signal to the waiting task.

5. (task) Resume and finish execution.

6. (host) Wait for task completion and destroy tasks.

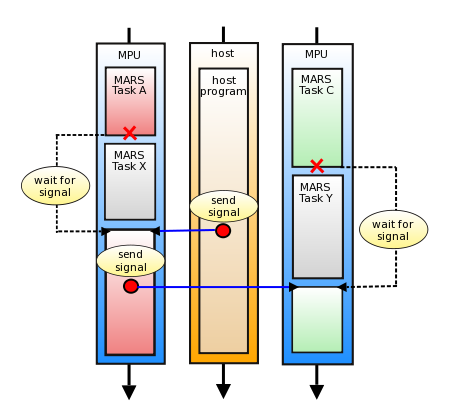

Fig. 8.7

In Fig. 8.7, there is a host program using signals to synchronize execution between 2 MARS tasks.

First, Task A reaches the synchronization point, where it waits on a signal. At this point in time, nothing has signalled Task A, so it enters a waiting state and yields MPU execution to Task X.

Next, Task B reaches its synchronization point and waits on a signal. Since nothing has signalled Task B, it also enters the waiting state and yields MPU execution to another Task Y.

Next, the host program sends a signal to Task A, at which point Task A becomes available for resumed execution.

Finally, as Task A becomes scheduled and resumes execution, it signals Task B, at which point Task B becomes available for resumed execution.
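A signal can be modeled as two flags per task. The sketch below is hypothetical: struct model_signal_task and the model_* functions are illustrative names, and keeping a signal pending when it is sent to a task that is not yet waiting is an assumption of this sketch rather than documented behavior.

```c
#include <stdbool.h>

struct model_signal_task {
    bool waiting;  /* task is in the waiting state */
    bool pending;  /* signal arrived while not waiting (assumption) */
};

/* Returns true if the task may continue immediately; false if it
 * enters the waiting state. */
bool model_signal_wait(struct model_signal_task *t)
{
    if (t->pending) {
        t->pending = false;
        return true;
    }
    t->waiting = true;
    return false;
}

/* Returns true if the signal made a waiting task available to resume. */
bool model_signal_send(struct model_signal_task *t)
{
    if (t->waiting) {
        t->waiting = false;
        return true;
    }
    t->pending = true;
    return false;
}
```

Replaying Fig. 8.7, both tasks wait, the host's signal makes Task A ready, and Task A's signal then makes Task B ready.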

- See also:

- Task Signal API

9 Task Tutorials

This section gives various code tutorials on the basic usage of the MARS Task and MARS Task Synchronization API.

- Note:

- The code in the tutorial is simplified for ease of explanation. Please refer to the actual code provided in the MARS Samples package for the full implementations.

- To keep the examples simple, the code in the tutorial does not check the return values of all calls into the MARS API. In your actual code, the return value of every MARS API function call should be checked accordingly.

- The code in the tutorial is platform independent. Any platform specific implementation details are either not shown, or a generic placeholder is used in place of platform specific code. Please refer to the specific code explanation for the details.

- All the tutorials assume knowledge from other sections of this documentation and the detailed explanation of previous tutorials within this section.

9.1 Task Execution from Host

This tutorial explains how to prepare and schedule a MARS task for execution from the host program.

The sample code creates and schedules a task that prints "Hello!" to stdout and exits.

(host program)

```
 1  #include <mars/task.h>
 2
 3  static void *task_program_elf_image;
 4
 5  int main(void)
 6  {
 7      struct mars_context *mars_ctx;
 8      struct mars_task_id task_id;
 9      int task_exit_code;
10
11      mars_context_create(&mars_ctx, 0, 0);
12      mars_task_create(mars_ctx, &task_id, "Task", task_program_elf_image, 0);
13      mars_task_schedule(&task_id, NULL, 0);
14      mars_task_wait(&task_id, &task_exit_code);
15      mars_task_destroy(&task_id);
16      mars_context_destroy(mars_ctx);
17
18      return 0;
19  }
```

| Line | Description |
| --- | --- |
| Line:1 | Include the header file "mars/task.h" necessary for utilizing the MARS task library. |
| Line:3 | Pointer to the task program's ELF image in host storage. The procedure to load the task program into host storage is platform specific, so the code to do so is not shown in this sample code. |
| Line:7 | Declare the MARS context pointer. |
| Line:8 | Declare the structure for storing the MARS task id. |
| Line:9 | Declare the variable to store the task exit code. |
| Line:11 | Create the MARS context instance with int mars_context_create. arg1: The address of the pointer to the MARS context declared at Line:7; a MARS context will be created and its address stored in this pointer. arg2: The number of MPUs you want this MARS context to use; the number of MPUs specified must be available in the system, or an error is returned. Here 0 is specified to have MARS use all the available MPUs for the context. arg3: Specifies whether you are requesting a shared context; here 0 is specified since this sample does not need to share the MARS context. return: MARS_SUCCESS on success and a negative error value otherwise. |
| Line:12 | Create the MARS task instance with int mars_task_create. arg1: Pass in the MARS context pointer. arg2: Pass in the pointer to the MARS task id structure declared at Line:8; upon successful completion, the task id will be initialized as required. arg3: Specify the NULL-terminated string name of the task you want to create. arg4: Specify the address of the task program's ELF image that is loaded into host storage; this task program is what will be loaded into MPU storage for execution when the task is scheduled to run by the MARS kernel. arg5: Specify the context save area size for this task. Since this task will not task switch, it does not need a context save area, so specify 0. To create a task that can task switch, specify a context save size or MARS_TASK_CONTEXT_SAVE_SIZE_MAX. return: MARS_SUCCESS on success and a negative error value otherwise. |
| Line:13 | Schedule the task for execution with int mars_task_schedule. arg1: Pass in the pointer to the task id initialized at Line:12. arg2: Pass in the pointer to the task arg structure to pass into the task program's mars_task_main function; this sample does not need to pass any args into the task program, so specify NULL. arg3: Pass in the scheduling priority of this task; since only 1 task is scheduled for execution, the scheduling priority has no effect in this example. return: MARS_SUCCESS on success and a negative error value otherwise. |
| Line:14 | Wait for the completion of the task with int mars_task_wait. arg1: Pass in the pointer to the task id to wait for. arg2: Pass in the address of the variable declared at Line:9 to store the task exit code. return: MARS_SUCCESS on success and a negative error value otherwise. This call blocks until the task scheduled at Line:13 completes execution. To do other processing in the host program while the task runs, do it before calling wait. Alternatively, the non-blocking function mars_task_try_wait is provided to poll for task completion. |
| Line:15 | Destroy the completed task with int mars_task_destroy. arg1: Pass in the pointer to the task id to destroy. return: MARS_SUCCESS on success and a negative error value otherwise. This function can only be called once the task is known to have finished. In this example, completion is certain because the program waited for it at Line:14. After the task is destroyed, it can no longer be scheduled for execution. |
| Line:16 | Destroy the MARS context with int mars_context_destroy. arg1: Pass in the pointer to the MARS context to finalize. return: MARS_SUCCESS on success and a negative error value otherwise. This unloads all running MARS kernels from the MPUs and handles any necessary cleanup for the MARS library. No more MARS API calls can be made after this function until a MARS context is created again. |

(task program)

```
 1  #include <stdio.h>
 2  #include <mars/task.h>
 3
 4  int mars_task_main(const struct mars_task_args *task_args)
 5  {
 6      (void)task_args;
 7
 8      printf("MPU(%d): %s - Hello!\n",
 9             mars_task_get_kernel_id(), mars_task_get_name());
10
11      return 0;
12  }
```

| Line | Description |
| --- | --- |
| Lines:1-2 | Include the header file "stdio.h" for printf and "mars/task.h" necessary for utilizing the MARS task library. |
| Line:6 | Since NULL was specified for the task args at Line:13 of the host program above, the state of task_args is undefined. This program does not, and should not, access task_args. |
| Lines:8-9 | Print a message to stdout. The call to mars_task_get_kernel_id returns the id of the kernel that the current task is running on. The call to mars_task_get_name returns the string name of the currently running task, as specified during task creation at Line:12 of the host program above. |
| Line:11 | Returning from mars_task_main completes execution of the task. This signals anything waiting for this task's completion to resume execution; in this example, the host program's call to mars_task_wait at Line:14 returns. Calling mars_task_exit is equivalent to returning from mars_task_main. The return value is passed back to the host program in the variable passed into mars_task_wait. |

9.2 Task Execution from MPU

This tutorial explains how to create and schedule a MARS task for execution from another task program.

The sample code creates 3 separate task instances: one instance of the main task 1 program and 2 instances of the sub task 2 program.

The main task is scheduled for execution by the host. The main task then schedules the 2 sub task instances for execution, using the sub task ids passed to it in the arguments specified by the host during scheduling.

Each instance of the sub task will print out "Hello!" and a unique value specified by the arguments passed in by the main task during scheduling.

(host program)

```
 1  #include <stdio.h>
 2  #include <mars/task.h>
 3
 4  #define NUM_SUB_TASKS 2
 5
 6  static void *task1_program_elf_image;
 7  static void *task2_program_elf_image;
 8
 9  int main(void)
10  {
11      struct mars_context *mars_ctx;
12      struct mars_task_id task1_id;
13      struct mars_task_id task2_id[NUM_SUB_TASKS];
14      struct mars_task_args task_args;
15      int i;
16
17      mars_context_create(&mars_ctx, 0, 0);
18
19      mars_task_create(mars_ctx, &task1_id, "Task 1", task1_program_elf_image, MARS_TASK_CONTEXT_SAVE_SIZE_MAX);
20
21      for (i = 0; i < NUM_SUB_TASKS; i++) {
22          char name[16];
23          sprintf(name, "Task 2.%d", i);
24          mars_task_create(mars_ctx, &task2_id[i], name, task2_program_elf_image, 0);
25      }
26
27      task_args.type.u64[0] = mars_ptr_to_ea(&task2_id[0]);
28      task_args.type.u64[1] = mars_ptr_to_ea(&task2_id[1]);
29
30      mars_task_schedule(&task1_id, &task_args, 0);
31      mars_task_wait(&task1_id, NULL);
32      mars_task_destroy(&task1_id);
33
34      for (i = 0; i < NUM_SUB_TASKS; i++)
35          mars_task_destroy(&task2_id[i]);
36
37      mars_context_destroy(mars_ctx);
38
39      return 0;
40  }
```

| Line:13 | Declare an instance of the task id structure for each sub task we want to create and schedule.

|

| Lines:19 | Create the main task instance with the ELF image of task program 1. The main task needs to provide a context save area in order to allow for context switching while waiting for sub task completion. Specify MARS_TASK_CONTEXT_SAVE_SIZE_MAX for the context save area size so a context save area is initialized for the main task context.

|

| Lines:21-25 | Create the 2 sub task instances with the ELF image of task program 2. The sub task does not need to do a context switch so no context save area size needs to specified.

|

| Lines:27-28 | The main task needs to know the addresses of the task ids it plans to schedule for execution. Store each sub task id address into the task args passed into the main task's mars_task_main function.

|

| Line:30 | Schedule the main task for execution. Pass in the task args we initialized with the sub task id addresses at Lines:27-28. Since we only schedule 1 main task for execution, and the main task is waiting when any one of its sub task's is being executed, the scheduling priority specified has no effect in this example.

|

| Line:31 | Wait for the completion of the main task.

|

| Line:32 | Destroy the completed main task.

|

| Line:34-35 | Destroy the completed sub tasks also.

|
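The host program hands the sub task ids to the main task by storing their addresses, widened to 64-bit effective addresses (eas), in the task args. The following is a minimal host-only sketch of that packing step in plain C. Everything prefixed with `example_` is hypothetical: the union only approximates `struct mars_task_args` (whose real layout may differ), and `example_ptr_to_ea` illustrates the idea behind `mars_ptr_to_ea` rather than reproducing it.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for struct mars_task_args (layout assumed):
   a union that can be viewed as 64-bit or 32-bit argument slots. */
struct example_task_args {
    union {
        uint64_t u64[4];
        uint32_t u32[8];
    } type;
};

/* Hypothetical stand-in for struct mars_task_id. */
struct example_task_id {
    uint32_t workload_id;
};

/* Widen a host pointer to a 64-bit ea. Going through uintptr_t keeps the
   conversion well defined on both 32-bit and 64-bit hosts. */
static uint64_t example_ptr_to_ea(const void *ptr)
{
    return (uint64_t)(uintptr_t)ptr;
}

/* Inverse conversion; only meaningful in the host's own address space. */
static void *example_ea_to_ptr(uint64_t ea)
{
    return (void *)(uintptr_t)ea;
}

/* Store the addresses of two sub task ids in the args, as the host
   program does at Lines:27-28. */
static void example_pack_ids(struct example_task_args *args,
                             const struct example_task_id ids[2])
{
    memset(args, 0, sizeof(*args));
    args->type.u64[0] = example_ptr_to_ea(&ids[0]);
    args->type.u64[1] = example_ptr_to_ea(&ids[1]);
    /* Widening to 64 bits must not lose any address bits. */
    assert(example_ea_to_ptr(args->type.u64[0]) == (const void *)&ids[0]);
    assert(example_ea_to_ptr(args->type.u64[1]) == (const void *)&ids[1]);
}
```

The reverse conversion only works inside the host's address space. A task running on an MPU cannot dereference the ea directly; it has to transfer the data into MPU storage first, which is what the `get` placeholder in the task 1 program stands for.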

(task 1 program)

     1  #include <mars/task.h>
     2
     3  int mars_task_main(const struct mars_task_args *task_args)
     4  {
     5      struct mars_task_id task2_0_id;
     6      struct mars_task_id task2_1_id;
     7      struct mars_task_args args;
     8
     9      get(&task2_0_id, task_args->type.u64[0], sizeof(task2_0_id));
    10      get(&task2_1_id, task_args->type.u64[1], sizeof(task2_1_id));
    11
    12      args.type.u32[0] = 123;
    13      mars_task_schedule(&task2_0_id, &args, 0);
    14
    15      args.type.u32[0] = 321;
    16      mars_task_schedule(&task2_1_id, &args, 0);
    17
    18      mars_task_wait(&task2_0_id, NULL);
    19      mars_task_wait(&task2_1_id, NULL);
    20
    21      return 0;
    22  }

| Line:3 | Since the task args were passed into mars_task_schedule at Line:30 of the host program, task_args is pointing to an initialized mars_task_args structure.

|

| Line:5 | Declare an instance to store the task id of the first sub task to execute.

|

| Line:6 | Declare an instance to store the task id of the second sub task to execute.

|

| Line:7 | Declare an instance of the task args structure we want to initialize with unique values to pass into the sub tasks.

|

| Lines:9-10 | Transfer the task id structures of the initialized sub tasks from host storage to MPU storage. The host storage addresses of these task id structures were specified at Lines:27-28 of the host program. The function "get" shown here is a generic placeholder for the platform-specific function that performs the memory transfer. Refer to your platform-specific API to learn how to transfer memory from host storage to MPU storage on your platform.

|

| Lines:12-13 | Initialize the task args structure with a unique value. Schedule the first sub task instance using the task id obtained at Line:9. Pass in the task args and a priority of 0.

|

| Lines:15-16 | Initialize the task args structure with a unique value. Schedule the second sub task instance using the task id obtained at Line:10. Pass in the task args and a priority of 0.

|

| Lines:18-19 | Wait for the completion of both sub tasks. If the first sub task has not finished execution by the time of the call to mars_task_wait at Line:18, this main task enters a wait state and its context is switched out. When the first sub task completes execution, this main task resumes execution and continues on to wait for the second sub task. Similarly, if the second sub task has not yet completed by the time of the call to mars_task_wait at Line:19, this main task enters a wait state once again until the second sub task completes.

|
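The schedule-then-wait pattern of the task 1 program can be approximated on an ordinary host with POSIX threads, which may help when reasoning about ordering. This is an analogy only: `pthread_create` stands in for `mars_task_schedule`, `pthread_join` stands in for `mars_task_wait`, and the names `sub_task`, `sub_task_main`, and `main_task` are hypothetical. It does not model the part that matters on MARS, namely the waiting main task being context switched out so that its sub tasks can run on the MPU.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>

/* Hypothetical sub task record: the thread plus its argument and result. */
struct sub_task {
    pthread_t thread;
    uint32_t arg;     /* plays the role of args.type.u32[0] */
    uint32_t seen;    /* the value the sub task actually received */
};

/* Stand-in for the task 2 program: record the unique value it was given. */
static void *sub_task_main(void *p)
{
    struct sub_task *t = p;
    t->seen = t->arg;
    return NULL;
}

/* Stand-in for the task 1 program: start both sub tasks with unique values,
   then wait for each in turn. Returns 0 if both ran, -1 otherwise. */
static int main_task(struct sub_task subs[2])
{
    const uint32_t values[2] = { 123, 321 };
    int started = 0;

    for (; started < 2; started++) {
        subs[started].arg = values[started];
        subs[started].seen = 0;
        if (pthread_create(&subs[started].thread, NULL,
                           sub_task_main, &subs[started]) != 0)
            break;
    }

    /* Like mars_task_wait: block until each started sub task finishes. */
    for (int i = 0; i < started; i++) {
        pthread_join(subs[i].thread, NULL);
        /* pthread_join makes the sub task's writes visible here. */
        assert(subs[i].seen == subs[i].arg);
    }
    return started == 2 ? 0 : -1;
}
```

Each thread gets its own argument slot because a thread may read its argument after the next `pthread_create` call. The task 1 program reuses a single `args` structure for both sub tasks, which suggests that `mars_task_schedule` copies the arguments at schedule time.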

(task 2 program)

     1  #include <stdio.h>
     2  #include <mars/task.h>
     3
     4  int mars_task_main(const struct mars_task_args *task_args)
     5  {
     6      printf("MPU(%d): %s - Hello! (%d)\n",
     7             mars_task_get_kernel_id(), mars_task_get_name(),
     8             task_args->type.u32[0]);
     9
    10      return 0;
    11  }

| Line:4 | Since the task args were passed into mars_task_schedule at Line:13 and Line:16 of the main task 1 program, task_args is pointing to an initialized mars_task_args structure. This structure contains the unique value specified by the main task 1 program.

|

| Lines:6-8 | Print a message to stdout that includes the kernel id, the task name, and the unique value specified by the main task 1 program. This value should be different for each sub task.

|

9.3 Task Barrier Usage

This tutorial explains how to use the MARS task barrier to synchronize execution between multiple MARS tasks. The sample code creates a task barrier and 10 task instances of a single task program. Each task must perform several iterations of some pre-processing work followed by some post-processing work. Within each iteration, all tasks must complete their pre-processing work before any task can continue to the post-processing work. A task barrier is used to enforce this: after finishing the pre-processing and before starting the post-processing, each task notifies the barrier of its arrival. Once all tasks have notified the barrier and it is released, all tasks can proceed with the post-processing work.

(host program)

     1  #include <stdio.h>
     2  #include <mars/task.h>
     3
     4  #define NUM_TASKS 10
     5
     6  static void *task_program_elf_image;
     7
     8  int main(void)
     9  {
    10      struct mars_context *mars_ctx;
    11      struct mars_task_id task_id[NUM_TASKS];
    12      struct mars_task_args task_args;
    13      uint64_t barrier_ea;
    14      int i;
    15
    16      mars_context_create(&mars_ctx, 0, 0);
    17
    18      mars_task_barrier_create(mars_ctx, &barrier_ea, NUM_TASKS);
    19
    20      for (i = 0; i < NUM_TASKS; i++) {
    21          char name[16];
    22          sprintf(name, "Task %d", i);
    23
    24          mars_task_create(mars_ctx, &task_id[i], name, task_program_elf_image, MARS_TASK_CONTEXT_SAVE_SIZE_MAX);
    25
    26          task_args.type.u64[0] = barrier_ea;
    27          mars_task_schedule(&task_id[i], &task_args, 0);
    28      }
    29
    30      for (i = 0; i < NUM_TASKS; i++) {
    31          mars_task_wait(&task_id[i], NULL);
    32          mars_task_destroy(&task_id[i]);
    33      }
    34
    35      mars_task_barrier_destroy(barrier_ea);
    36
    37      mars_context_destroy(mars_ctx);
    38
    39      return 0;
    40  }

| Line:11 | Declare an array of 10 task ids, one for each instance of the task program we plan to create and schedule.

|

| Line:13 | Declare a variable to hold the ea of the task barrier.

|

| Line:18 | Create the task barrier instance. int mars_task_barrier_create ( arg1: Pass in the MARS context pointer. arg2: Pass in the address of the barrier ea variable declared at Line:13. arg3: Pass in the total number of task notifications to wait for before the barrier is released. return: MARS_SUCCESS is returned on success and a negative error value otherwise. )

|

| Line:24 | Create each of the 10 task instances. Specify the task program ELF image for these task instances and a context save area size of MARS_TASK_CONTEXT_SAVE_SIZE_MAX to allow these tasks to context switch.

|

| Lines:26-27 | Initialize the task args we want passed into the task program's mars_task_main function. Store the barrier ea in the task args. Schedule the task instance for execution, passing in the task args.

|

| Lines:30-37 | Wait for completion and destroy all 10 task instances. Finally destroy the barrier instance and the MARS context.

|

(task program)

     1  #include <stdio.h>
     2  #include <mars/task.h>
     3
     4  #define ITERATIONS 3
     5  void pre_barrier_process(void), post_barrier_process(void); /* dummy work, defined elsewhere */
     6  int mars_task_main(const struct mars_task_args *task_args)
     7  {
     8      int i;
     9      uint64_t barrier_ea = task_args->type.u64[0];
    10
    11      for (i = 0; i < ITERATIONS; i++) {
    12          pre_barrier_process();
    13
    14          mars_task_barrier_notify(barrier_ea);
    15          mars_task_barrier_wait(barrier_ea);
    16
    17          post_barrier_process();
    18      }
    19
    20      return 0;
    21  }

| Line:6 | Since the task args were passed into mars_task_schedule at Line:27 of the host program, task_args is pointing to an initialized mars_task_args structure.

|

| Line:9 | Get the ea of the barrier created by the host program from the task args structure.

|

| Line:11 | Perform ITERATIONS iterations of processing in the task. Each iteration is synchronized by the barrier.

|

| Line:12 | Do some pre-barrier processing. For this sample, assume it performs some dummy work.

|

| Line:14 | Notify the barrier that we have arrived at the synchronization point. int mars_task_barrier_notify ( arg1: Pass in the ea of the barrier. return: MARS_SUCCESS is returned on success and a negative error value otherwise. )

|

| Line:15 | Wait for the barrier to be released. int mars_task_barrier_wait ( arg1: Pass in the ea of the barrier. return: MARS_SUCCESS is returned on success and a negative error value otherwise. ) If the barrier has not been released by the time of this call, some of the other tasks have not yet finished their pre-barrier processing and notified the barrier. In that case, this task enters a wait state and its context is switched out. When all tasks have notified the barrier and it is released, this task resumes execution and continues.

|

| Line:17 | Do some post-barrier processing. For this sample, assume it performs some dummy work.

|
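MARS splits barrier participation into two calls: mars_task_barrier_notify records arrival and mars_task_barrier_wait blocks until every participant has arrived. The sketch below models that split-phase behavior with a POSIX mutex and condition variable so the semantics can be exercised on an ordinary host. It is not the MARS implementation; all names (`split_barrier`, `barrier_demo`, `run_barrier_demo`, and so on) are hypothetical, threads stand in for tasks, and, unlike the MARS API, `split_barrier_notify` returns a round number that the caller passes to `split_barrier_wait` so a late waiter is not confused by a barrier that has already moved on to its next round.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

/* Hypothetical split-phase barrier modeled on notify/wait semantics. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t released;
    unsigned total;       /* notifications needed to release a round */
    unsigned arrived;     /* notifications received in the current round */
    unsigned long round;  /* incremented each time the barrier is released */
} split_barrier;

static void split_barrier_init(split_barrier *b, unsigned total)
{
    pthread_mutex_init(&b->lock, NULL);
    pthread_cond_init(&b->released, NULL);
    b->total = total;
    b->arrived = 0;
    b->round = 0;
}

static void split_barrier_destroy(split_barrier *b)
{
    pthread_cond_destroy(&b->released);
    pthread_mutex_destroy(&b->lock);
}

/* Record arrival without blocking; the last arrival releases the round. */
static unsigned long split_barrier_notify(split_barrier *b)
{
    pthread_mutex_lock(&b->lock);
    unsigned long my_round = b->round;
    if (++b->arrived == b->total) {
        b->arrived = 0;
        b->round++;
        pthread_cond_broadcast(&b->released);
    }
    pthread_mutex_unlock(&b->lock);
    return my_round;
}

/* Block until the round this caller notified in has been released. */
static void split_barrier_wait(split_barrier *b, unsigned long my_round)
{
    pthread_mutex_lock(&b->lock);
    while (b->round == my_round)
        pthread_cond_wait(&b->released, &b->lock);
    pthread_mutex_unlock(&b->lock);
}

#define MAX_WORKERS 16

/* Shared state for a demo that mirrors the tutorial's task program. */
typedef struct {
    split_barrier barrier;
    pthread_mutex_t lock;
    unsigned workers, iterations;
    unsigned pre_done;    /* pre-barrier steps completed across all workers */
    unsigned violations;  /* post-barrier steps that started too early */
} barrier_demo;

static void *barrier_worker(void *p)
{
    barrier_demo *d = p;
    for (unsigned i = 0; i < d->iterations; i++) {
        pthread_mutex_lock(&d->lock);           /* pre_barrier_process() */
        d->pre_done++;
        pthread_mutex_unlock(&d->lock);

        unsigned long r = split_barrier_notify(&d->barrier);
        split_barrier_wait(&d->barrier, r);

        pthread_mutex_lock(&d->lock);           /* post_barrier_process() */
        if (d->pre_done < (i + 1) * d->workers) /* a worker is still behind */
            d->violations++;
        pthread_mutex_unlock(&d->lock);
    }
    return NULL;
}

/* Run `workers` threads for `iterations` rounds; return ordering violations. */
static unsigned run_barrier_demo(unsigned workers, unsigned iterations)
{
    pthread_t tid[MAX_WORKERS];
    barrier_demo d = { .workers = workers, .iterations = iterations };
    assert(workers > 0 && workers <= MAX_WORKERS);
    split_barrier_init(&d.barrier, workers);
    pthread_mutex_init(&d.lock, NULL);
    for (unsigned t = 0; t < workers; t++)
        if (pthread_create(&tid[t], NULL, barrier_worker, &d) != 0)
            abort();
    for (unsigned t = 0; t < workers; t++)
        pthread_join(tid[t], NULL);
    assert(d.pre_done == workers * iterations);
    pthread_mutex_destroy(&d.lock);
    split_barrier_destroy(&d.barrier);
    return d.violations;
}
```

Calling `run_barrier_demo(10, 3)` mirrors the tutorial's 10 tasks and 3 iterations and should report no violations. Keeping notify separate from wait lets a participant do unrelated work between the two calls, whereas POSIX `pthread_barrier_wait` combines both steps in one call.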

9.4 Task Event Flag Usage

This tutorial explains how to use the MARS task event flag to synchronize execution between the host program and MARS tasks. The sample code creates one task instance each of the task 1 program and the task 2 program, and creates 3 event flags.

The first event flag is used to synchronize the host program and task 1. Task 1 can only begin processing after the host program has waited 1 second and set the event flag that allows task 1 to begin.

The second event flag is used to synchronize the 2 tasks. Task 2 can only begin processing after task 1 has completed its processing and set the event flag that allows task 2 to begin.

The third event flag is used to synchronize task 2 and the host program. The host program waits until task 2 has completed its processing and set the event flag that allows the host program to continue and finish execution.
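The three-stage relay described above can be modeled on an ordinary host with a small event flag built from a POSIX mutex and condition variable. This is a sketch, not the MARS implementation: all `ex_`-prefixed names and the `relay` helpers are hypothetical, and threads stand in for tasks. The AND mode corresponds to the `MARS_TASK_EVENT_FLAG_MASK_AND` mode used by the host program; the OR mode and the exact auto-clear behavior (clearing the masked bits when a wait is satisfied) are assumptions of this sketch.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical model of a 32-bit event flag; not the MARS implementation. */
enum ex_mask_mode { EX_MASK_AND, EX_MASK_OR };
enum ex_clear_mode { EX_CLEAR_AUTO, EX_CLEAR_MANUAL };

struct ex_event_flag {
    pthread_mutex_t lock;
    pthread_cond_t changed;
    uint32_t bits;
    enum ex_clear_mode clear_mode;
};

static void ex_flag_init(struct ex_event_flag *f, enum ex_clear_mode mode)
{
    pthread_mutex_init(&f->lock, NULL);
    pthread_cond_init(&f->changed, NULL);
    f->bits = 0;
    f->clear_mode = mode;
}

/* OR the given bits into the flag and wake every waiter to re-check. */
static void ex_flag_set(struct ex_event_flag *f, uint32_t bits)
{
    pthread_mutex_lock(&f->lock);
    f->bits |= bits;
    pthread_cond_broadcast(&f->changed);
    pthread_mutex_unlock(&f->lock);
}

/* Block until all (AND) or any (OR) of the mask bits are set and return the
   bits observed. Auto-clear removes the mask bits (an assumption), so only
   one waiter consumes each event. */
static uint32_t ex_flag_wait(struct ex_event_flag *f, uint32_t mask,
                             enum ex_mask_mode mode)
{
    pthread_mutex_lock(&f->lock);
    while (mode == EX_MASK_AND ? (f->bits & mask) != mask
                               : (f->bits & mask) == 0)
        pthread_cond_wait(&f->changed, &f->lock);
    uint32_t seen = f->bits;
    if (f->clear_mode == EX_CLEAR_AUTO)
        f->bits &= ~mask;
    pthread_mutex_unlock(&f->lock);
    return seen;
}

/* The tutorial's relay: host -> task 1 -> task 2 -> host. */
struct relay {
    struct ex_event_flag host_to_t1, t1_to_t2, t2_to_host;
    int stages;   /* each stage increments this after its wait returns */
};

static void *relay_task1(void *p)
{
    struct relay *r = p;
    ex_flag_wait(&r->host_to_t1, 0x1, EX_MASK_AND);
    r->stages++;                         /* task 1 "processing" */
    ex_flag_set(&r->t1_to_t2, 0x1);
    return NULL;
}

static void *relay_task2(void *p)
{
    struct relay *r = p;
    ex_flag_wait(&r->t1_to_t2, 0x1, EX_MASK_AND);
    assert(r->stages == 1);              /* task 1 must have finished */
    r->stages++;                         /* task 2 "processing" */
    ex_flag_set(&r->t2_to_host, 0x1);
    return NULL;
}

/* Returns the number of completed stages; 2 means the relay ran in order. */
static int run_relay(void)
{
    struct relay r = { .stages = 0 };
    pthread_t t1, t2;
    ex_flag_init(&r.host_to_t1, EX_CLEAR_AUTO);
    ex_flag_init(&r.t1_to_t2, EX_CLEAR_AUTO);
    ex_flag_init(&r.t2_to_host, EX_CLEAR_AUTO);
    if (pthread_create(&t1, NULL, relay_task1, &r) != 0 ||
        pthread_create(&t2, NULL, relay_task2, &r) != 0)
        abort();
    ex_flag_set(&r.host_to_t1, 0x1);                 /* let task 1 start */
    ex_flag_wait(&r.t2_to_host, 0x1, EX_MASK_AND);   /* wait for task 2 */
    int stages = r.stages;
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return stages;
}
```

Because each flag is set under its mutex and each wait re-acquires that mutex, the write to `stages` in one stage is visible to the next stage without further synchronization.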

(host program)

     1  #include <unistd.h>
     2  #include <mars/task.h>
     3
     4  static void *task1_program_elf_image;
     5  static void *task2_program_elf_image;
     6
     7  int main(void)
     8  {
     9      struct mars_context *mars_ctx;
    10      struct mars_task_id task1_id;
    11      struct mars_task_id task2_id;
    12      struct mars_task_args task_args;
    13      uint64_t host_to_mpu_ea;
    14      uint64_t mpu_to_host_ea;
    15      uint64_t mpu_to_mpu_ea;
    16
    17      mars_context_create(&mars_ctx, 0, 0);
    18
    19      mars_task_event_flag_create(mars_ctx, &host_to_mpu_ea,
    20                                  MARS_TASK_EVENT_FLAG_HOST_TO_MPU,
    21                                  MARS_TASK_EVENT_FLAG_CLEAR_AUTO);
    22
    23      mars_task_event_flag_create(mars_ctx, &mpu_to_host_ea,
    24                                  MARS_TASK_EVENT_FLAG_MPU_TO_HOST,
    25                                  MARS_TASK_EVENT_FLAG_CLEAR_AUTO);
    26
    27      mars_task_event_flag_create(mars_ctx, &mpu_to_mpu_ea,
    28                                  MARS_TASK_EVENT_FLAG_MPU_TO_MPU,
    29                                  MARS_TASK_EVENT_FLAG_CLEAR_AUTO);
    30
    31      mars_task_create(mars_ctx, &task1_id, "Task 1", task1_program_elf_image, MARS_TASK_CONTEXT_SAVE_SIZE_MAX);
    32      mars_task_create(mars_ctx, &task2_id, "Task 2", task2_program_elf_image, MARS_TASK_CONTEXT_SAVE_SIZE_MAX);
    33
    34      task_args.type.u64[0] = host_to_mpu_ea;
    35      task_args.type.u64[1] = mpu_to_mpu_ea;
    36      mars_task_schedule(&task1_id, &task_args, 0);
    37
    38      task_args.type.u64[0] = mpu_to_mpu_ea;
    39      task_args.type.u64[1] = mpu_to_host_ea;
    40      mars_task_schedule(&task2_id, &task_args, 0);
    41
    42      sleep(1);
    43
    44      mars_task_event_flag_set(host_to_mpu_ea, 0x1);
    45      mars_task_event_flag_wait(mpu_to_host_ea, 0x1, MARS_TASK_EVENT_FLAG_MASK_AND, NULL);
    46
    47      mars_task_wait(&task1_id, NULL);
    48      mars_task_wait(&task2_id, NULL);
    49
    50      mars_task_destroy(&task1_id);
    51      mars_task_destroy(&task2_id);
    52
    53      mars_task_event_flag_destroy(host_to_mpu_ea);
    54      mars_task_event_flag_destroy(mpu_to_host_ea);
    55      mars_task_event_flag_destroy(mpu_to_mpu_ea);
    56
    57      mars_context_destroy(mars_ctx);
    58
    59      return 0;
    60  }

| Lines:13-15 | Declare 3 variables to hold the eas of the task event flags we plan to create.

|

| Lines:19-29 | Create the 3 task event flag instances. int mars_task_event_flag_create ( arg1: Pass in the MARS context pointer. arg2: Pass in the address of the event flag ea variable declared at Lines:13-15. arg3: Pass in the direction of events for the instance. The direction must be MARS_TASK_EVENT_FLAG_HOST_TO_MPU, MARS_TASK_EVENT_FLAG_MPU_TO_HOST, or MARS_TASK_EVENT_FLAG_MPU_TO_MPU. arg4: Pass in the clear mode for the instance. Specify MARS_TASK_EVENT_FLAG_CLEAR_AUTO to have the event flag bits cleared automatically when the first waiter on the event receives it. Specify MARS_TASK_EVENT_FLAG_CLEAR_MANUAL to leave the event flag bits set until some task clears them explicitly. return: MARS_SUCCESS is returned on success and a negative error value otherwise. ) The first event flag is created for host program to task program events, the second for task program to host program events, and the third for task program to task program events.

|

| Lines:31-32 | Create the task instance for both the task 1 program and task 2 program. Specify a context save area size of MARS_TASK_CONTEXT_SAVE_SIZE_MAX to allow these tasks to context switch.

|

| Lines:34-36 | Initialize the task args we want passed into the task 1 program's mars_task_main function. Store the eas of the host-to-MPU and MPU-to-MPU event flags. Task 1 uses these event flags to receive events from the host program and to send events to the task 2 program. Schedule the task instance for execution, passing in the task args.

|

| Lines:38-40 | Initialize the task args we want passed into the task 2 program's mars_task_main function. Store the eas of the MPU-to-MPU and MPU-to-host event flags. Task 2 uses these event flags to receive events from the task 1 program and to send events to the host program. Schedule the task instance for execution, passing in the task args.

|

| Line:42 | Sleep for 1 second before continuing. This gives the tasks enough time to be scheduled and begin execution, so that task 1 is already waiting when the event is set. The delay is only there to demonstrate a task entering the wait state while waiting for a specific event.

|

| Line:44 | Set the event that task 1 is waiting for, allowing task 1 to continue execution. int mars_task_event_flag_set ( arg1: Pass in the ea of the event flag instance we created for host to MPU communication. arg2: Pass in the value specifying which bits to set in the event flag. These bits are logically OR'ed with the bits already set in the event flag. return: MARS_SUCCESS is returned on success and a negative error value otherwise. )

|