...making Linux just a little more fun!

November 2010 (#180):

- News Bytes, by Deividson Luiz Okopnik and Howard Dyckoff

- Away Mission - ApacheCon, QCon, ZendCon and LISA, by Howard Dyckoff

- Enemy Action, by Henry Grebler

- House, Lies and Sysadmin, by Henry Grebler

- Installing/Configuring/Caching Django on your Linux server, by Anderson Silva and Steve 'Ashcrow' Milner

- Ten Tips to Harden Your Website, by Sander Temme

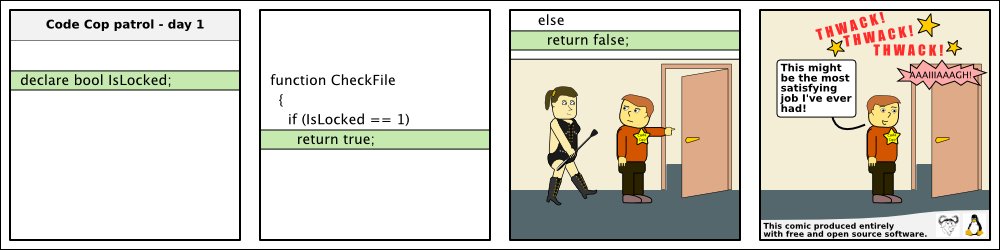

- HelpDex, by Shane Collinge

- Ecol, by Javier Malonda

- XKCD, by Randall Munroe

- Doomed to Obscurity, by Pete Trbovich

News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff


Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to bytes@linuxgazette.net. Deividson can also be reached via Twitter.

News in General

Linux Foundation User Survey Shows Enterprise Gains

At its recent End User Summit, the Linux Foundation published "Linux

Adoption Trends: A Survey of Enterprise End Users," which shares new

data that shows Linux is poised to take significant market share from

Unix and especially Windows while becoming more mission critical and

more strategic in the enterprise.

The data in the report reflects the results of an invitation-only

survey of The Linux Foundation's Enterprise End User Council as well

as other companies and government organizations. The survey was

conducted by The Linux Foundation in partnership with Yeoman

Technology Group during August and September 2010 with responses from

more than 1900 individuals.

This report filters data on the world's largest enterprise companies

and government organizations - identified by 387 respondents at

organizations with $500 million or more a year in revenues or greater

than 500 employees.

The data shows that Linux vendors are poised for growth in the years

to come as large Linux users plan to both deploy more Linux relative

to other operating systems and to use the OS for more mission critical

workloads than ever before. Linux is also becoming the preferred

platform for new or "greenfield" deployments, representing a major

shift in user patterns as IT managers break away from legacy systems.

Key findings from the Linux adoption report:

* 79.4 percent of companies are adding more Linux relative to

other operating systems in the next five years.

* More people are reporting that their Linux deployments are

migrations from Windows than any other platform, including Unix

migrations. 66 percent of users surveyed say that their Linux

deployments are brand new ("Greenfield") deployments.

* Among the early adopters who are operating in cloud

environments, 70.3 percent use Linux as their primary platform, while

only 18.3 percent use Windows.

* 60.2 percent of respondents say they will use Linux for more

mission-critical workloads over the next 12 months.

* 86.5 percent of respondents report that Linux is improving and

58.4 percent say their CIOs see Linux as more strategic to the

organization as compared to three years ago.

* Drivers for Linux adoption extend beyond cost - technical

superiority is the primary driver, followed by cost and then security.

The growth in Linux, as demonstrated by this report, is leading

companies to increasingly seek Linux IT professionals, with 38.3

percent of respondents citing a lack of Linux talent as one of their

main concerns related to the platform.

Users participate in Linux development in three primary ways: testing

and submitting bugs (37.5%), working with vendors (30.7%) and

participating in The Linux Foundation activities (26.0%).

"The IT professionals we surveyed for the Linux Adoption Trends report

are among the world's most advanced Linux users working at the largest

enterprise companies and illustrate what we can expect from the market

in the months and years ahead," said Amanda McPherson, vice president

of marketing and developer programs at The Linux Foundation.

To download the full report, please visit:

http://www.linuxfoundation.org/lp/page/download-the-free-linux-adoption-trends-report.

Conferences and Events

- Linux Kernel Summit

November 1-2, 2010. Hyatt Regency Cambridge, Cambridge, MA

http://events.linuxfoundation.org/events/linux-kernel-summit.

- ApacheCon North America 2010

1-5 November 2010, Westin Peachtree, Atlanta GA

The theme of the ASF's official user conference, trainings, and expo is

"Servers, The Cloud, and Innovation," featuring an array of educational

sessions on open source technology, business, and community topics at the

beginner, intermediate, and advanced levels.

Experts will share professionally directed advice, tactics, and lessons learned

to help users, enthusiasts, software architects, administrators, executives,

and community managers successfully develop, deploy, and leverage existing and

emerging Open Source technologies critical to their businesses.

- QCon San Francisco 2010

November 1-5, Westin Hotel, San Francisco CA

http://qconsf.com/.

- ZendCon 2010

November 1-4, Convention Center, Santa Clara, CA

http://www.zendcon.com/.

- 9th International Semantic Web Conference (ISWC 2010)

November 7-11, Convention Center, Shanghai, China

http://iswc2010.semanticweb.org/.

- LISA '10 - Large Installation System Administration Conference

November 7-12, San Jose, CA

http://usenix.com/events/.

- ARM Technology Conference

November 9-11, Convention Center, Santa Clara, CA

http://www.arm.com/about/events/12129.php.

- Sencha Web App Conference 2010

November 14-17, Fairmont Hotel, San Francisco CA

http://www.sencha.com/conference/.

- Agile Development Practices East

November 14-19, Rosen Centre, Orlando, FL

http://www.sqe.com/AgileDevPracticesEast/.

- Devoxx 2010 (Java Dev Conference)

November 15-19, Metropolis, Antwerp, Belgium

http://reg.devoxx.com/.

- Semantic Web Summit 2010

November 16-17, Hynes Center, Boston, MA

Discount Code: SEMTECH

http://www.semanticwebsummit.com/.

- DreamForce 2010 / SalesForce Expo

December 6-9, Moscone Center, San Francisco

http://www.salesforce.com/dreamforce/DF10/home/.

- CARTES & IDentification 2010

December 7-9, Nord Centre, Paris, France

[Use code VCGR01 for 20% off or free expo pass]

http://www.cartes.com/ExposiumCms/do/exhibition/.

Distro News

Ubuntu 10.10 Released

The latest Ubuntu version, 10.10, was released at 10:10:10 UTC on Oct

10th.

This release is focused on home and mobile computing users, and

introduces an array of online and offline applications to Ubuntu

Desktop edition with a particular focus on the personal cloud.

Canonical has also launched the 'Ubuntu Server on Cloud 10' program

which allows one to try out Ubuntu 10.10 Server Edition on Amazon EC2 for free for 42

minutes. Visitors to the download pages will now be able to experience

the speed of public cloud computing and Ubuntu. For a direct link to

the trial, please go to: http://10.cloud.ubuntu.com.

Ubuntu 10.10 will be supported for 18 months on desktops, netbooks,

and servers.

Ubuntu Netbook Edition users can experience a new desktop interface

called 'Unity' -- specifically tuned for smaller screens and computing

on the move. Unity includes a brand-new Ubuntu font family, a

redesigned system installer, the latest GNOME 2.32 desktop, Shotwell

as the new default photo manager, plus other new features.

Find more information on Ubuntu 10.10 here:

http://www.ubuntu.com/getubuntu/releasenotes/1010overview.

Puppy Linux 5.1 released

The new Puppy Linux 5.1, code-name "Lucid" as it is binary-compatible

with Ubuntu Lucid Lynx packages, has been released. Lucid Puppy 5.1 is

a "full-featured compact distro."

As a Puppy distro, it is fast, friendly, and it can serve as a main

Linux desktop. Quickpet and Puppy Package Manager allow easy

installation of many Linux programs, tested and configured for Lucid

Puppy.

The Puppy UI looks better because the core Lucid team now includes an

art director. The changes relative to Puppy 5.0 have been to improve

the user interface, both to make it more friendly and to enhance the

visual appearance.

Lucid Puppy boots directly to an automatically configured graphical

desktop, provides tools to personalize that desktop, and recommends

which add-on video driver to use for high-performance graphics.

Download Puppy 5.1 from http://www.puppylinux.com/download/.

Ubuntu Rescue Remix 10.10 Released

Version 10.10 (Maverick Meerkat) of the data recovery software toolkit

based on Ubuntu is out. This is an Ubuntu-based live medium which

provides the data recovery specialist with a command-line interface to

current versions of the most powerful open-source data recovery

software including GNU ddrescue, Photorec, The Sleuth Kit and

Gnu-fdisk. A more complete list is available here:

http://ubuntu-rescue-remix.org/Software.

The live Remix provides a full shell environment and can be downloaded

here: http://ubuntu-rescue-remix.org/files/URR/iso/ubuntu-rescue-remix-10-10.iso.

This iso image is also compatible with the USB Startup Disk Creator

that has been included with Ubuntu since 9.04. PendriveLinux also provides

an excellent way for Windows users to put Ubuntu-Rescue-Remix on a

stick.

The live environment has a very low memory requirement due to not

having a graphical interface (command-line only). If you prefer to

work in a graphical environment, a metapackage is available which will

install the data recovery and forensics toolkit onto your current

Ubuntu Desktop system.

The toolkit can also be installed on a live USB Ubuntu Desktop with

persistent data. To do so, add the following archive to your software

channels:

deb http://ppa.launchpad.net/arzajac/ppa/ubuntu lucid main

Then authenticate this software source by running the following

command:

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys BDFD6D77

Then, install the "ubuntu-rescue-remix-tools" package.

Software and Product News

Novell Delivers SUSE Linux Enterprise Server for VMware

VMware and Novell have announced the general availability of SUSE

Linux Enterprise Server for VMware, the first step in the companies'

expanded partnership announced in June of 2010. The solution is

designed to reduce IT complexity and accelerate the customer evolution

to a fully virtualized datacenter.

With SUSE Linux Enterprise Server for VMware, customers who purchase a

VMware vSphere license and subscription also receive a subscription

for patches and updates to SUSE Linux Enterprise Server for VMware at

no additional cost. Additionally, VMware will offer the option to

purchase technical support services for SUSE Linux Enterprise Server

for VMware for a seamless support experience available directly and

through its network of solution provider partners. This unique

solution benefits customers by reducing the cost and complexity of

deploying and maintaining an enterprise operating system running on

VMware vSphere.

With SUSE Linux Enterprise Server for VMware, both companies intend to

provide customers the ability to port their SUSE Linux-based workloads

across clouds. Such portability will deliver choice and flexibility

for VMware vSphere customers and is a significant step forward in

delivering the benefits of seamless cloud computing.

"This unique partnership gives VMware and Novell customers a

simplified and lower-cost way to virtualize and manage their IT

environments, from the data center to fully virtualized datacenters,"

said Joe Wagner, senior vice president and general manager, Global

Alliances, Novell. "SUSE Linux Enterprise Server for VMware is the

logical choice for VMware customers deploying and managing Linux

within their enterprise. This agreement is also a strong validation of

Novell's strategy to lead in the intelligent workload management

market."

"VMware vSphere delivers unique capabilities, performance and

reliability that enable our customers to virtualize even the most

demanding and mission-critical applications," said Raghu Raghuram,

senior vice president and general manager, Virtualization and Cloud

Platforms, VMware. "With SUSE Linux Enterprise Server for VMware, we

provide customers a proven enterprise Linux operating platform with

subscription to patches and updates at no additional cost, improving

their ability to complete the transformation of their data center into

a private cloud while further increasing their return on investment."

Kanguru Hardware Encrypted USB Flash Drives for Linux

Kanguru Solutions has added Mac and Linux to the list of compatible

platforms for its Kanguru Defender Elite, manageable, secure flash

drives.

The Kanguru Defender Elite offers security features including: 256-bit

AES Hardware Encryption, Remote Management Capabilities, On-board

Anti-Virus, Tamper Resistant Design, and FIPS 140-2 Certification. The

addition of GUI support for Mac and Linux opens Kanguru's secure flash

drives to a much wider audience, allowing Mac and Linux users to

benefit from its industry leading security features.

"Our goal has been to develop the most comprehensive set of security

features ever built into a USB thumb drive," said Nate Cote, VP of

Product Management. "And part of that includes making them work across

various platforms. Encryption and remote management are only useful if

they work within your organization's IT infrastructure."

The Kanguru Defender Elite currently works with:

* Red Hat 5

* Ubuntu 10

* Mac 10.5 and above

* Windows XP and above

Additional compatibility is in development for several other Linux

distributions and connectivity options.

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Away Mission - ApacheCon, QCon, ZendCon and LISA

By Howard Dyckoff

This November has a surfeit of superior conferences, most actually running

in the first week. The conferences listed in the title all start on Nov

1st which might force you to make some hard choices.

I'll be putting notes on ApacheCon after ZendCon and QCon, but not out

of a preference; instead, it's simply in order of shortest commentary to

longest. These are all great events and we could even include the Linux

Kernel Summit, which is also the first week of November, but we haven't

attended one before.

LISA

Unlike the conferences reviewed below, LISA - the Large Installation System

Administration conference organized by USENIX - is during the second week of

November and has few conflicts with other events. This is an event with a

long history, dating back to the '80s and predating the Internet boom.

Indeed, this is the 24th LISA conference. The focus nowadays is on the

backends for companies like Google, Yahoo, and Facebook.

I haven't attended a LISA conference since I was a sysadmin, before the

beginning of the millennium, but I fondly remember having an opportunity to

talk with other sysadmins who were working on the bleeding edge of data

center operations. This is the first LISA actually in the San Francisco

area, even though USENIX is headquartered in Berkeley. Most West Coast

LISA events have been held in San Diego.

Presentations at LISA cover a variety of topics, e.g. IPv6 and ZFS, and

training includes Linux Security, Virtualization, and cfEngine among

others. Topics covered by the invited presenters include:

- "10,000,000,000 Files Available Anywhere: NFS at Dreamworks," by Sean

Kamath and Mike Cutler, PDI/Dreamworks

- "Operations at Twitter: Scaling Beyond 100 Million Users," by John

Adams, Twitter

You can find online proceedings from LISA going back to 1993 here.

ZendCon 2010

The 6th Annual Zend/PHP Conference will bring together PHP developers and

IT staff from around the world to discuss PHP best practices. This year it

will be held at the Santa Clara Convention Center which has lots of free

parking and is convenient to Silicon Valley and the San Jose airport. It

starts on November 1st and - unfortunately - conflicts with QCon in San

Francisco and ApacheCon in Atlanta.

This used to be a vest-pocket conference at the Hyatt near the San

Francisco airport and was much more accessible from that city. There was a

charm in its smallness and its tight focus and it brought the PHP faithful

together with the Zend user community.

This year, ZendCon will host technical sessions in 9 tracks plus have

in-depth tutorials and an enlarged Exhibit Hall (with IBM, Oracle and

Microsoft as major sponsors). A variety of tracks - SQL, NoSQL,

architecture, lifecycle, and server operations - are available. This is an

opportunity to learn PHP best practices in many areas.

There will also be an unconference running the 2nd and 3rd days of

ZendCon, featuring both 50 minute sessions and 20 minute lightning talks.

There is also a CloudCamp unconference on the evening of the tutorial day

where early adopters of Cloud Computing technologies exchange ideas. And

the 3 days of regular conferencing, without tutorials, is only a modest

$1100 before it starts. Not a bad deal either way.

If you want to look for the slides from ZendCon 2009, they can be found here; to listen to the audio

recordings being released as a podcast, take a look here.

For full 2010 conference info, visit

http://www.zendcon.com/.

QCon 2010

QCon is a personal favorite of mine because of its breadth and its

European roots. The mix of advanced Agile discussions with Java and Ruby

programming and new database technology is intoxicating. It's also a place

to see and hear something not repeated at other US conferences.

This year, tracks include Parallel Programming, the Cloud, Architectures

You Never Heard Of, Java, REST, Agile process, and NoSQL. Check out the

tracks and descriptions here.

Last year, the Agile track (and the tutorials) included several sessions on

Kanban as a form of 'Lean' and 'Agile' development methodology. Kanban is

derived from the signaling used to control railroad traffic and uses

token-passing to optimize queues and flows. The idea is to do the right

amount of work to feed the next step in the process. An example of a Kanban

work flow can be seen in the anime feature "Spirited Away" by Hayao

Miyazaki, where a ghostly bath house regulates the use of hot water by

passing out use tokens. That way the capacity of the system is never

exceeded.

To get info on last year's event, including videos of Martin Fowler and

Kent Beck speaking, visit this link.

ApacheCon 2010

I was pleasantly surprised to find this 'Con so close to home since it is

held in Boston half the time. The Oakland Convention Center is an

underutilized gem in the redeveloped Oakland downtown, very close to the

regional Bay Area Rapid Transit station and many fine eateries in Oakland's

burgeoning new cuisine district. And the weather is warmer than in San

Francisco.

ApacheCon spanned an entire week in 2009. The training period started the

week with 2 days of half day classes. This was paralleled by 2 days of Bar

Camp - the Unconference that is `included in ApacheCon' - as well as a

Hackathon, which in this case was a kind of code camp for project

submitters.

This was the first year that the Bar Camp and Hackathon paralleled

the training days. Since these two programs were 'free' as in beer, it

afforded a chance for many local developers to get involved; it also drew

several folk who had never been to ApacheCon. It was also the 10th

anniversary of the Apache Software Foundation and there was a celebration

during the conference.

Sessions were 50 min long and spanned 4 concurrent tracks. The track

content varied over the 3 days of ApacheCon and included many prominent

Apache projects. Some presentations were a bit dry and concerned with the

details and philosophy of ongoing projects. Some were report cards on the

progress of joint efforts with industry and academia.

I attended sessions on Tomcat and Geronimo as well as Axis and other

Apache projects. These were very detailed and informative.

The Lightning Talks are short sessions held the last night of the

conference. This ApacheCon variant is up to 5 minutes, on anything you

want. The limited rules state "No Slides and No Bullets" (as there would be

in presentations.) They provide the beer and wine. And they encourage

recitals - it's supposed to be fun... And they were.

The closing keynote was by Brian Behlendorf, titled "How Open Source

Developers Can (Still!) Save The World". Behlendorf was the primary

developer of the Apache Web server and a founding member of the Apache

Group and is currently a Director of CollabNet, the major sponsor of

Subversion and a company he co-founded with O'Reilly & Associates.

He spoke about the important contribution developers can make to

non-profits and noted that he is on the board of Benetech, which provides

the Martus encryption tools to the human rights and social justice sector

to assist in the collection, safeguarding, organization, and dissemination

of information about human rights violations. Martus Server Software

accepts encrypted bulletins, securely backs them up and replicates them to

multiple locations, safeguarding the information from loss. The software

was used in the Bosnia war crimes trials.

Among other non-profit development projects, Behlendorf mentioned PloneGov.org which produces modules for

Plone to implement common services (like a city meeting template). The aim

is to make government more transparent and more efficient.

Behlendorf also mentioned Sahana, a part of the Sri Lankan Apache community

that was started after the 2004 tsunami to help relocated survivors. This

effort has now resulted in a disaster relief management package used in 20

cities. The UN is starting to use it as this was a space neglected by

commercial software corporations.

His summary points: we geeks have skills that are worth more than just

dollars and hours to non-profit organizations. So find a project and help

out; even non-code contributions can matter.

This year's ApacheCon will be held in Atlanta starting on November 1st.

Sessions will feature tracks on Cassandra/NoSQL, Content Technologies,

(Java) Enterprise Development, Geronimo, Felix/OSGi, Hadoop + Cloud

Computing, Tomcat, Tuscany, Commons, Lucene, Mahout + Search, Business

& Community. Free events include a 2-day BarCamp and the evening

MeetUps. For more info, visit http://na.apachecon.com/c/acna2010/.

Lunches were boxed on most conference days, but the last day had hot

pizza and pasta with great desserts so it was worth lasting out the

conference. Also, all the vendor swag was piled on a table for the taking

on the last afternoon - so with ApacheCon, patience is a virtue.

The wiki for ApacheCon has some links for presentation slides, but not

after the European ApacheCon that preceded the North America ApacheCon in

2009. See here.

SalesForce, DreamForce

If you are interested in Cloud Computing, consider the user conference for

SalesForce.com, the annual DreamForce

event in San Francisco. It was a November event in 2009 but will happen on

December 6-9 this year. There are usually a few open source vendors at the

DreamForce expo.

[ The Away Mission Column will be on holiday leave next month. ]

Copyright © 2010, Howard Dyckoff. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 180 of Linux Gazette, November 2010

Enemy Action

By Henry Grebler

"Once is happenstance. Twice is coincidence. Three times is

enemy action."

- Ian Fleming (spoken by Auric Goldfinger)

Once

Sometimes I buy tickets on the Internet. About a month ago, I bought

tickets to the footy.

There are several options, but I choose to get the tickets emailed to

me as a PDF. I have no idea what research the ticketing agencies do,

but they obviously do not include me in the profile of their typical

user.

I planned to go with a friend, Jeremy, who supports the opposition

team. Jeremy is in paid employment, whereas I am currently "between

engagements". He intended to ride his bicycle to the stadium; I would

take the train. I agreed to meet Jeremy inside the stadium.

But I've still got to get him his ticket.

Sometimes the tickets come as separate PDF files, but this time they

were both in a single PDF. I struggled mindlessly for some time and

eventually sent Jeremy a 6.5 MB .ppm! Fortunately, it gzipped very

nicely and the email was less than 350 KB.

Twice

Recently, I bought more tickets for some future footy game. Again,

they came as a single PDF. This time it was Mark and Matt to whom I

wanted to send tickets.

I went to my help directory to see what I had done the previous time.

Nothing. As disciplined as I try to be, I remain disappointingly

human.

Never mind. I've had lots of time living with myself. I now know there

are two of me. I've learnt to put up with the hopeless deficiencies of

the Bad Henry. After all, what can you do?

So, I worked out (again!) how to split out pages from a PDF. Between

the first and second time, I'd been on a ten-day trip to Adelaide.

Before I leave home on long trips I shut down my computer. And my

brain.

My solution this time involved PostScript files. I wrote it up (Good

Henry was in charge). Then, for completeness (Good Henry can be quite

anal), I reconstructed what I probably did the first time and wrote that up

also.

Three times

Today it happened again. Last night I booked tickets for the theatre.

(You see? There's more to me than just football.) When I looked at

the tickets, I found a single PDF containing 4 tickets: two for me and

my wife, two for Peter and his wife, Barbara.

And finally I hit the roof. I'd tried Adobe Acrobat,

xpdf, and evince. They all have the same problem:

they insist on honouring the stupid restrictions included in the PDF,

things like "no copying".

Encrypted: yes (print:yes copy:no change:no addNotes:no)

Think about it. How stupid is this? If I have x.pdf,

no matter what some cretin includes in the file, nothing can stop me

from going:

cp x.pdf x2.pdf

So what has been achieved by the restriction? The words of the song

about the benefits of war come to mind: absolutely nothing! And I

am tempted to say it again: absolutely nothing.

[ These days, there are so many ways to remove/bypass/eliminate/ignore that

so-called "protection" - e.g., ensode.net's online "unlocker" -

that it is indeed meaningless. -- Ben ]

So let's say I send Jeremy or Mark or Matt a copy of the entire PDF.

What I'm concerned about is that they may inadvertently print the

wrong ticket. Sure, in the email, I tell each person which ticket is

his. But... since each ticket has a barcode, perhaps the stadium

checks and complains about a second attempt to present the same

ticket. Embarrassment all round.

Notice that I'm not trying to do anything illegal or underhanded. I'm

not trying to violate some cretin's digital rights. I'm not trying to

harm anyone. I'm trying to get a ticket to my friend; a ticket that I

bought legitimately; one that I'm not only entitled, but required, to

print out. I guess they want me to fax it to him. But isn't that also

making a copy? Do they expect me to drive across town to get him his

ticket?

It seems to me amazing that I'm the only one who wants this

capability. Every time I've looked on my computer (apropos)

or anywhere else (Internet), I've never found a convenient mechanism.

I now have a mechanism (see next section), but this is really a plea

for help. Can anyone suggest something a little more elegant than what

I've come up with?

2-Cent Tip

Here is the best method I could come up with for extracting one page,

or several contiguous pages, from a PDF into a separate PDF:

gs -dSAFER -sDEVICE=pdfwrite -dNOPAUSE -dBATCH \

-dFirstPage=3 -dLastPage=4 \

-sOutputFile=PeterAndBarbara.pdf Tickets.pdf

Basically this command says to extract pages 3 and 4 from Tickets.pdf

and put them into PeterAndBarbara.pdf. The first line consists mainly

of window dressing.
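The one-off command generalizes to a small shell function. What follows is a minimal sketch rather than part of the original tip: the function name extract_pages and the GS variable are assumptions introduced here for illustration. GS defaults to gs; setting it to echo prints the argument list instead of running Ghostscript, which makes it easy to check the command line before touching any files.

```shell
#!/bin/sh
# extract_pages FIRST LAST INPUT OUTPUT
# Hypothetical helper (not from the article): wraps the gs command shown above.
# GS names the Ghostscript binary; GS=echo prints the arguments instead of running gs.
extract_pages () {
    [ $# -eq 4 ] || {
        echo "usage: extract_pages first last input.pdf output.pdf" >&2
        return 2
    }
    "${GS:-gs}" -dSAFER -sDEVICE=pdfwrite -dNOPAUSE -dBATCH \
        -dFirstPage="$1" -dLastPage="$2" \
        -sOutputFile="$4" "$3"
}
```

With this loaded, `extract_pages 3 4 Tickets.pdf PeterAndBarbara.pdf` should run the same command as the tip, and `GS=echo extract_pages 3 4 Tickets.pdf PeterAndBarbara.pdf` only prints it.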

If you want to extract each page as a separate PDF file (not a bad

idea), here's a script:

#! /bin/sh
# pdfsplit.sh - extract each page of a PDF into a separate file

myname=`basename "$0"`

#----------------------------------------------------------------------#
Usage () {
    cat <<EOF
Usage: $myname file

where file is the PDF to split

Output goes to /tmp/page_n.pdf
EOF
    exit 1
}

#----------------------------------------------------------------------#
Die () {
    echo "$myname: $*" >&2
    exit 1
}

#----------------------------------------------------------------------#
[ $# -eq 1 ] || Usage

# Take the page count from the "Pages:" line of pdfinfo's output
pages=`pdfinfo "$1" | awk '/^Pages:/ {print $2}'`
[ "$pages" = '' ] && Die "No pages found. Perhaps $1 is not a pdf."
[ "$pages" -eq 1 ] && Die "Only 1 page in $1. Nothing to do."

# Write each page to its own file, /tmp/page_1.pdf, /tmp/page_2.pdf, ...
jj=1
while [ $jj -le $pages ]
do
    gs -dSAFER -sDEVICE=pdfwrite -dNOPAUSE -dBATCH \
        -dFirstPage=$jj -dLastPage=$jj \
        -sOutputFile=/tmp/page_$jj.pdf "$1"
    jj=`expr $jj + 1`
done

Notes

You can look at gs(1) for a limited amount of help. For more

useful help, do this:

gs -h

and look at the second last line. Mine says:

For more information, see /usr/share/ghostscript/8.15/doc/Use.htm.

I've probably broken my system, because it is actually in

/usr/share/doc/ghostscript-8.15/Use.htm. Another

system refers correctly to

/usr/local/share/ghostscript/8.64/doc/Use.htm.

You can use your usual browser or you can use:

lynx /usr/share/doc/ghostscript-8.15/Use.htm

See also pdfinfo(1). Here's an example of its output:

pdfinfo Tickets.pdf

Producer: Acrobat Distiller 7.0.5 (Windows)

CreationDate: Tue Mar 16 04:21:58 2010

ModDate: Tue Mar 16 04:21:58 2010

Tagged: no

Pages: 4

Encrypted: yes (print:yes copy:yes change:no addNotes:no)

Page size: 595 x 842 pts (A4)

File size: 1290313 bytes

Optimized: no

PDF version: 1.4
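The script above pulls the page count out of this output with a text filter. The same step is sketched here in Python, purely as an illustration: page_count is a hypothetical helper, and the sample text is an excerpt of the output shown above. Matching the key exactly means a Title line that happens to contain the word "Pages" cannot be mistaken for the count.

```python
def page_count(pdfinfo_output):
    """Return the integer after 'Pages:' in pdfinfo output, or None if absent."""
    for line in pdfinfo_output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "Pages":
            try:
                return int(value.strip())
            except ValueError:
                return None
    return None


sample = """Tagged:         no
Pages:          4
Encrypted:      yes (print:yes copy:yes change:no addNotes:no)
Page size:      595 x 842 pts (A4)
"""
print(page_count(sample))
```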

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/grebler.jpg)

Henry has spent his days working with computers, mostly for computer

manufacturers or software developers. His early computer experience

includes relics such as punch cards, paper tape and mag tape. It is

his darkest secret that he has been paid to do the sorts of things he

would have paid money to be allowed to do. Just don't tell any of his

employers.

He has used Linux as his personal home desktop since the family got its

first PC in 1996. Back then, when the family shared the one PC, it was a

dual-boot Windows/Slackware setup. Now that each member has his/her own

computer, Henry somehow survives in a purely Linux world.

He lives in a suburb of Melbourne, Australia.

Copyright © 2010, Henry Grebler. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 180 of Linux Gazette, November 2010

House, Lies and Sysadmin

By Henry Grebler

In the TV show House, the eponymous protagonist often repeats the idea

that patients lie.

"I don't ask why patients lie, I just assume they all do."

If you're in support, you could do worse than transpose that dictum to

your users. Of course, I'm using "lie" very loosely. Often the user

does not set out to deceive; and yet, sometimes, I can't escape the

feeling that they could not have done a better job of deception had

they tried.

Take a case that occurred recently. A user wrote to me. I'll call him

Gordon.

Hi Henry,

I'm embarrassed to say that I seem to have lost (presumably

deleted) my record of email for the AQERA association while

re-organizing email folders earlier this year.

The folder was called Assoc/AQERA, and I deleted it around May

18 of this year. Would it be possible to recover the folder

from a time point shortly before that date?

Thanks a lot

Gordon

This was written in early September. That's Gordon's first bit of bad

luck. We keep online indexes for 3 months. Do the math. A couple of

weeks earlier, and I might have got back his emails with little

trouble - and this article would never have been born.

Hi Gordon,

The difficult we do today. The impossible takes a little

longer.

Your request falls into the second category.

Cheers,

Henry

Some customers have unrealistic expectations. Gordon is remarkably

understanding:

Err, does that mean not possible, or that it will take a

while?

I understand it might not be recoverable, and it's my own

fault anyway, but I need to know.

Who knows what might have happened under normal circumstances? In this

case, there were some unusual elements. I'd started work at this

organisation May 10. Less than 2 weeks later, our mail server had

crashed. When we rebooted, one of the disk drives showed

inconsistencies which an fsck was not able to resolve (long story). We

had to go back to tapes and recover to a different disk on a different

machine and then serve the mail back to the first machine over NFS.

The disk drive which had experienced the inconsistencies was still

attached to the mail server. I would often mount it read-only to check

something or other. In this case, I checked to see if the emails he

wanted were on that drive. They weren't. The folder wasn't there.

There were other folders in the Assoc/AQERA family (Assoc/AQERA-2010,

Assoc/AQERA-2009, etc). But no Assoc/AQERA.

There was something else that was fishy. I can't remember the exact

date of the mail server crash. Let's say it was May 24. When we

recovered from backup, we would have taken the last full backup from

the first week in May. In other words, we would have restored his

account back to how it was before May 18. Without meaning to, we would

have "undeleted" the deleted folder his email claimed.

For many reasons, after the email system was restored, users did not

get access to their historical emails until early June.

So something he's telling me is misleading. Either he deleted the

folder before the beginning of May or after the middle of June. Or,

perhaps, it never existed. If I had online indexes going back far

enough, I could answer all questions in a few minutes. Without them,

I'm up for really long tape reads. My job is not about determining

where the truth lies, it's about recovering the emails.

I wrote to Gordon, explaining all this and added:

So, before I embark on a huge waste of time for both of us, I

suggest we have a little chat about exactly when you think you

deleted the folder, and exactly what its name is.

I suppose we had the discussion. A day or two later he wrote again:

Here's another thought, that I hope will save work rather than making

more.

I didn't notice that I was missing any mail until about 1

September. At that time I went to my mailbox

Assoc/AQERA-admin, which should have in principle contained

hundreds of emails, but actually contained only one.

If there is any recent backup in which Assoc/AQERA-admin contains

more than one or two emails, then those are the emails I'm

after.

And that was all I needed!

Stupidly, I followed the suggestion in his last paragraph - and drew a

blank. But then I went back to the failed disk and searched for

Assoc/AQERA-admin (rather than the elusive Assoc/AQERA).

I have found a place with the above directory which contains

843 emails dating back to 2003. I have restored it to your

mailbox as Assoc/AQERA-admin-May21 (in your notation).

I hope this helps.

And this is how I know my customers love me:

Fantastic!!

That's everything I was after. Recovering this mail will make

my job as AQERA president much easier over the next year.

Many thanks

Gordon

It's why I put up with the "lies" and inadvertent misdirections. I

have an overwhelming need to be appreciated. At heart, I'm still at

school craving any sign of the teacher's approval. It may have made me

a superb student. But what does it say for my self-esteem? Who's the

more together human being? Me? Or my son, whom I tried to shame into

doing better? He didn't care tuppence for his teacher's approval. Or

mine. And yet, here he is, doing third-year Mechatronics Engineering

at uni.


Copyright © 2010, Henry Grebler. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 180 of Linux Gazette, November 2010

Installing/Configuring/Caching Django on your Linux server

By Anderson Silva and Steve 'Ashcrow' Milner

In today's world, web development is all about turnaround. Businesses want to

maximize production outcome while minimizing development and production time.

Small, lean development teams are increasingly becoming the norm in large

development departments. Enter Django:

a popular Python web framework

that invokes the RWAD (rapid web application development) and DRY (don't repeat

yourself) principles with clean, pragmatic design.

This article is not about teaching you how to program in Python, nor how to use

the Django framework. It's about showing how to promote your Django applications

onto an existing Apache or Lighttpd environment.

We will conclude with a simple way that you can improve the performance of your

Django application by using caching to speed up access time. This article also

assumes that you are running Fedora as your web application server, but all the

packages mentioned in this article are also available for other distributions, including through the

Extra Packages for Enterprise Linux

repository, which means these instructions should also be valid under

Red Hat Enterprise Linux or CentOS servers.

What you need

You must have Django installed:

$ yum install Django

If you want to serve Django apps under Apache you will need

mod_wsgi:

$ yum install httpd mod_wsgi

If you want to serve Django apps under Lighttpd:

$ yum install lighttpd lighttpd-fastcgi python-flup

Installing memcached to 'speed up' Django apps:

$ yum install memcached python-memcached

Starting a new Django project

1. Create a development workspace.

$ mkdir -p $LOCATION_TO_YOUR_DEV_AREA

$ cd $LOCATION_TO_YOUR_DEV_AREA

2. Start a new base Django project. This creates the boilerplate project

structure.

$ django-admin startproject myapp

3. From inside the new project directory, start the Django development web

server on port 8080 (or whatever other port you'd like).

Note: The development web server is just for testing and verification. Do not

use it as a production application server!

$ python manage.py runserver 8080

4. To run your Django project under Apache, enable mod_wsgi.

Note that your project must live in an SELinux-friendly location

(don't use home directories!) and be readable by the apache user.

On Fedora, mod_wsgi is enabled automatically at install time via /etc/httpd/conf.d/wsgi.conf;

the module is loaded the next time Apache is restarted.

5. Create virtual hosts by creating a new file at

/etc/httpd/conf.d/myapp.conf.

<VirtualHost *:80>

WSGIScriptAlias / /path/to/myapp/apache/django.wsgi

DocumentRoot /var/www/html/

ServerName your_domain_name

ErrorLog logs/my_app-error.log

CustomLog logs/my_app-access_log common

</VirtualHost>

6. In step 5 we defined a script alias; now we need to create the WSGI file

it references (/path/to/myapp/apache/django.wsgi).

import os

import sys

import django.core.handlers.wsgi

# Append our project path to the system library path

sys.path.append('/path/to/')

# Set the settings module so Django will work properly

os.environ['DJANGO_SETTINGS_MODULE'] = 'myapp.settings'

# sets application (the default wsgi app) to the Django handler

application = django.core.handlers.wsgi.WSGIHandler()
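It may help to see what mod_wsgi expects from the name application. The sketch below does not use Django at all: a hand-written callable stands in for Django's handler, and a small hypothetical helper, call_app, plays the role of the web server using only the standard library's wsgiref module.

```python
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    """Stand-in for Django's handler: same calling convention, trivial body."""
    body = ("Hello from %s" % environ["PATH_INFO"]).encode("utf-8")
    start_response("200 OK", [("Content-Type", "text/plain"),
                              ("Content-Length", str(len(body)))])
    return [body]


def call_app(app, path):
    """Invoke a WSGI callable the way a server would and capture the result."""
    environ = {}
    setup_testing_defaults(environ)   # fills in a minimal request environment
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = headers

    body = b"".join(app(environ, start_response))
    return captured["status"], body


status, body = call_app(application, "/polls/")
print(status, body.decode("utf-8"))
```

Any object with this calling convention can be served by mod_wsgi; Django's WSGIHandler is simply a much more capable one.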

Running your Django project under Lighttpd with FastCGI

The first thing you must do is start up your FastCGI server.

./manage.py runfcgi method=prefork socket=/var/www/my_app.sock pidfile=django_myapp.pid

Then modify your lighttpd.conf file to use the FastCGI server.

server.document-root = "/var/www/django/"

fastcgi.server = (

"/my_app.fcgi" => (

"main" => (

# Use host / port instead of socket for TCP fastcgi

# "host" => "127.0.0.1",

# "port" => 3033,

"socket" => "/var/www/my_app.sock",

"check-local" => "disable",

)

),

)

alias.url = (

"/media/" => "/var/www/django/media/",

)

url.rewrite-once = (

"^(/media.*)$" => "$1",

"^/favicon\.ico$" => "/media/favicon.ico",

"^(/.*)$" => "/my_app.fcgi$1",

)

Setting up caching in Django

Django has many different caching backends, including database, memory,

filesystem, and the ever popular memcached. According to

http://www.danga.com/memcached/,

memcached is "a high-performance, distributed memory object caching system,

generic in nature, but intended for use in speeding up dynamic web applications

by alleviating database load." It's used by high traffic sites such as

Slashdot and

Wikipedia. This makes it a prime candidate

for caching in your cool new web app.
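The "alleviating database load" part is easiest to see in miniature. The following sketch uses a plain dictionary as a stand-in for the cache and a fake lookup function as a stand-in for the database; DictCache, slow_lookup and get_user are all invented for this illustration and are not part of Django or memcached.

```python
class DictCache(object):
    """In-memory stand-in for a cache backend (hypothetical, for illustration)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def set(self, key, value):
        self._data[key] = value


database_hits = []


def slow_lookup(user_id):
    """Pretend database query; records each call so we can count them."""
    database_hits.append(user_id)
    return {"id": user_id, "name": "user%d" % user_id}


def get_user(cache, user_id):
    """Check the cache first and fall back to the database only on a miss."""
    key = "user:%d" % user_id
    user = cache.get(key)
    if user is None:
        user = slow_lookup(user_id)
        cache.set(key, user)
    return user


cache = DictCache()
get_user(cache, 7)
get_user(cache, 7)
print(len(database_hits))
```

The second call is answered from the cache, so the pretend database is consulted only once.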

First, install memcached.

$ yum install memcached

Next, install the python bindings for memcached.

$ yum install python-memcached

Next, verify that memcached is running using memcached's init script.

$ /etc/init.d/memcached status

memcached (pid 6771) is running...

If it's not running, you can manually start it.

$ /sbin/service memcached start

If you want to make sure it will automatically start every time after a reboot:

$ /sbin/chkconfig --level 35 memcached on

Now that you have verified that memcached is running, you will want to tell

your Django application to use memcached as its caching backend. You can do

this by adding a CACHE_BACKEND entry to your settings.py file.

CACHE_BACKEND = 'memcached://127.0.0.1:11211/'

The format is "backend://host:port/" or "backend:///path" depending on the

backend chosen. Since we are using memcached, we have the option to run multiple

daemons on different servers and share the cache across multiple machines. If

you want to do this, all you must do is list the server:port combinations in

the CACHE_BACKEND and separate them by semicolons. In this example we share the

cache across three different memcached servers:

CACHE_BACKEND = 'memcached://127.0.0.1:11211;192.168.0.10:11211;192.168.0.11/'
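To make the format concrete, here is a small sketch that takes such a string apart. It is not Django's own parser: parse_cache_backend is a hypothetical helper that handles only the memcached-style "backend://host:port;host:port/" form, and it assumes memcached's well-known default port, 11211, for any entry that omits one.

```python
def parse_cache_backend(uri):
    """Split 'backend://host:port;host:port/' into (backend, [(host, port), ...])."""
    backend, sep, rest = uri.partition("://")
    if not sep:
        raise ValueError("expected backend://...")
    servers = []
    for entry in rest.rstrip("/").split(";"):
        host, colon, port = entry.partition(":")
        # Fall back to memcached's default port when none is given.
        servers.append((host, int(port) if colon else 11211))
    return backend, servers


backend, servers = parse_cache_backend(
    "memcached://127.0.0.1:11211;192.168.0.10:11211;192.168.0.11/")
print(backend)
for host, port in servers:
    print(host, port)
```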

For more information on the different types of caching that can be performed

in the Django framework, please refer to their

official documentation.

Finally, whenever you are ready to get your applications into production,

you can always write your own Django

service script,

so your application can start up at boot time.

The original article was published on June 5th, 2008 by Red Hat Magazine,

and revised for the 2010 November Issue of Linux Gazette.


![[BIO]](../gx/authors/silva.jpg)

Anderson Silva works as an IT Release Engineer at Red Hat, Inc. He holds a

BS in Computer Science from Liberty University and an MS in Information Systems

from the University of Maine. He is a Red Hat Certified Architect and has

authored several Linux-based articles for publications such as Linux Gazette,

Revista do Linux, and Red Hat Magazine. Anderson has been married to his

High School sweetheart, Joanna (who helps him edit his articles before

submission), for 11 years, and has 3 kids. When he is not working or

writing, he enjoys photography, spending time with his family, road

cycling, watching Formula 1 and Indycar races, and taking his boys karting.

![[BIO]](../gx/2002/note.png)

Steve 'Ashcrow' Milner works as a Security Analyst at Red Hat, Inc. He

is a Red Hat Certified Engineer and is certified on ITIL Foundations.

Steve has two dogs, Anubis and Emma-Lee who guard his house. In his

spare time Steve enjoys robot watching, writing open code, caffeine,

climbing downed trees and reading comic books.

Ten Tips to Harden Your Website

By Sander Temme

Sander will be presenting “Hardening Enterprise Apache Installations

Against Attacks” and is Apache HTTP Server Track Chair at ApacheCon, the ASF’s official

conference, training, and expo, taking place 1-5 November 2010 in Atlanta,

Georgia.

Introduction

Websites are under continuous attack. Defacement, malware insertion and

data theft are but a few of the motivators for the attackers, and anyone who

runs an Internet-facing website should be prepared to defend themselves.

Website owners have many tools at their disposal with which to thwart

online attackers; entire books have been written about this

field. Here are ten tips that will quickly improve the security of your

website.

1. Harden your Operating System

The default installation of your server operating system likely comes

with a number of features you would not use. Turn off operating system

services that are not used by your web application. Be especially wary of

running anything that exposes a network listener (a network listener is a

system task that listens on a given network port for incoming client

connections). Uninstall software

packages you do not use, or opt not to install them in the first

place: the smaller your software installation, the smaller the attack

surface. Use the extra security features offered by your operating system

like SELinux or BSD securelevel. For instance, default SELinux

configuration on Red Hat Enterprise Linux strikes a good balance where

server programs that listen on the network are locked down tightly (which

makes sense), but regular system maintenance utilities have the run of the

system (because locking them down would be very complex to configure and

maintain).

2. Use Software and an Operating System that You can Maintain

The idea that one vendor’s operating system is inherently more secure

than any other is largely a myth. You do not achieve increased security

just by picking an operating system and then forgetting about it. Every

operating system environment is subject to vulnerabilities, which get fixed

and patched over time. Run your website on an operating system that you and

your organization are comfortable maintaining and patching: a well

maintained, up-to-date Windows server may well be more secure than a stale,

unmaintained Linux installation. Your task is to maintain and defend your

website, not to engage in a religious war of one operating system versus

another.

3. Slim Down your Server Software

When first installed, the default configuration of server software is

often intended to be a feature showcase, rather than an Internet-facing

deployment. Turn off unused Apache modules. Slim down your server

configuration file: only include configuration directives for functionality

that you actually use. Remove sample code, default accounts and manual

pages: install those on your development system. Turn off all error

reporting to the browser. A lean server configuration is easier to manage,

and presents attackers with less opportunity.

4. Access your Management Interface over SSL

Many website environments and Content Management Systems are managed

through an interface that is part of the website itself. Consider running

this part of your site over SSL to protect login credentials and other

sensitive data. An SSL-enabled website is protected by a cryptographic key

and certificate that identify the server. You do not need to buy an

expensive certificate to do this: generate your own certificate and add it to the

trusted certificate store of your browser: as website manager, you are the

only one who needs to trust your own certificate. Content Management

Systems like Wordpress allow you to enable SSL on the management interface

with a few configuration settings. Encrypting management connections with

SSL is an effective way to protect your all-important login credentials.

5. White-list Allowed Actions, Blacklist Attacks

As site manager, you should know which legitimate HTTP

operations users can perform on your site. Ideally, you should treat any

other action as hostile, and deny it. Unfortunately, this is not always

feasible or politically achievable: you may have inherited an environment

in which all the valid URL and operation combinations are simply unknown,

and aggressive restrictions may interfere with business operations. On the

Apache web server, you can use a Web Application Firewall like ModSecurity

by Ivan Ristic to allow and disallow access to particular URLs and actions.

Other web server software packages provide similar features. If you are

unable to white-list allowed actions, you should be able to blacklist

attacks as you see them happen.
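The white-list idea can be sketched in a few lines, independent of any particular web server or of ModSecurity's own rule language. Everything here is hypothetical: the WHITE_LIST entries describe an imaginary site, and is_allowed is an illustrative helper, not an API of any product mentioned above.

```python
import re

# Hypothetical white-list: every (method, URL pattern) pair the site supports.
WHITE_LIST = [
    ("GET", re.compile(r"^/$")),
    ("GET", re.compile(r"^/articles/\d+/$")),
    ("POST", re.compile(r"^/comments/$")),
]


def is_allowed(method, path):
    """Return True only if the request matches a white-listed pair."""
    return any(m == method and pattern.match(path)
               for m, pattern in WHITE_LIST)


for method, path in [("GET", "/articles/42/"),
                     ("POST", "/articles/42/"),
                     ("GET", "/admin.php")]:
    print(method, path, "allowed" if is_allowed(method, path) else "denied")
```

Anything not explicitly listed is denied, which is the essential difference from a blacklist.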

6. Use a Firewall

After a web application is compromised, the attackers will often attempt

to have the application download additional malware. Or, installed malware

may connect to a command-and-control server hosted elsewhere. Generally, a

web application should only respond to incoming requests, and not be

allowed to make outgoing TCP connections. This makes server maintenance a

little more cumbersome, but it is a small price to pay for stopping

attackers’ operations.

7. Keep your Software Up-to-date

Vulnerabilities are discovered on a regular basis in

every operating system and on all application platforms. Fortunately, once

discovered, they are usually fixed reasonably swiftly. Be sure to keep your

system and software up-to-date and install all vendor patches.

8. Peruse your Logs

Your web server will log every request it handles, and will note any

errors that occur. An attempt to attack your web server will likely leave a

rash of error messages in your server logs, and you should monitor those

logs on a regular basis to see how you are being attacked. Log monitoring

can also reveal URLs and operations that initiate attacks and should be

blacklisted.
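As a starting point for that kind of monitoring, the sketch below counts error responses per client address. It assumes the access log is in Common Log Format; the regular expression, the error_counts helper and the sample lines (which use documentation-only addresses) are illustrative assumptions, not taken from any real server.

```python
import re
from collections import Counter

# Common Log Format: host ident user [time] "request" status size
LOG_LINE = re.compile(r'^(\S+) \S+ \S+ \[[^\]]*\] "([^"]*)" (\d{3}) \S+')


def error_counts(lines):
    """Count responses with status >= 400 per client address."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and int(match.group(3)) >= 400:
            counts[match.group(1)] += 1
    return counts


sample = [
    '203.0.113.9 - - [01/Nov/2010:10:00:01 +0000] "GET /index.html HTTP/1.1" 200 1043',
    '198.51.100.7 - - [01/Nov/2010:10:00:02 +0000] "GET /phpmyadmin/ HTTP/1.1" 404 209',
    '198.51.100.7 - - [01/Nov/2010:10:00:03 +0000] "GET /admin.php HTTP/1.1" 404 209',
    '198.51.100.7 - - [01/Nov/2010:10:00:04 +0000] "POST /login HTTP/1.1" 403 199',
]
for address, count in error_counts(sample).most_common():
    print(address, count)
```

A client that racks up many errors in a short time is a good candidate for a closer look, and the URLs it requested are candidates for the blacklist.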

9. Prevent Writing to Document Root

Your website might serve two types of content: content that you put

there, and content that someone else puts there unbeknownst to you. The

Internet-facing network listener that provides access to your application

is its lifeline: without it your application would not be usable. However,

it is also an attack vector. Through specially crafted HTTP requests,

attackers will probe vulnerabilities in your application and configuration.

They will try to upload content or scripts to your website: if they can get

a PHP script uploaded and call it through the web server, it runs with the

same privileges as the web server program and can likely be used to wreak

havoc.

Content Management Systems like Joomla! and Wordpress allow site

managers to upload and update plug-ins, and in some cases, to configure the

server-side software through a web browser. This makes these packages

really easy to use, but the drawback is that the web server program must be

able to write to the server directories that contain the software. This

capability is easily abused by intruders. Never allow the web server

program to write to directories within the URL space of the site, where

uploaded content can be served and possibly called as interpreted scripts.

Instead, upload your software and plug-ins through an out-of-band mechanism

like SSH or SFTP.
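A rough way to audit this is to look for anything under the document root that is writable by more than its owner. The sketch below is deliberately simple and makes an assumption worth stating: it only checks the group and other write bits, whereas a fuller audit would also compare file ownership against the account the web server runs as. The helper name writable_by_others is made up for this example.

```python
import os
import stat


def writable_by_others(root):
    """Return paths under root whose mode grants write access to group or other."""
    risky = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            mode = os.lstat(path).st_mode
            if stat.S_ISLNK(mode):
                continue  # permission bits on symlinks are not meaningful
            if mode & (stat.S_IWGRP | stat.S_IWOTH):
                risky.append(path)
    return sorted(risky)


# Example: audit a document root (prints nothing if the directory is absent).
for path in writable_by_others("/var/www/html"):
    print(path)
```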

10. Use One Time Passwords

Abuse of login credentials is often a more successful attack method than

exploitation of software vulnerabilities. Active Internet users often have

dozens of User IDs and passwords on dozens of different websites. Expecting

users to follow the recommendations concerning password strength and change

frequency is unrealistic.

However, if there is one site for which you use strong passwords that

you change on a regular basis, make it your own website management

interface.

An even better way to protect login credentials is through use of One

Time Passwords. This method lets you calculate a new password for every

time you log into your site management interface, based on a challenge sent

by the site and a PIN that only you know. Programs to calculate One Time

Passwords are available for all operating systems, including smart phones,

so you can carry your OTP generator with you and separate it from the

network interaction with your website. Since an OTP can never be used

twice, this technique makes your website management interface immune to

keystroke loggers and network sniffers: even if your login information is

sniffed or captured, the attackers cannot re-use it.
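The challenge-and-PIN exchange can be illustrated with a toy model. To be clear about what this is: the sketch below uses HMAC-SHA256 as a stand-in and is not S/Key, OPIE, or any other standard One Time Password scheme; the Site class and otp_response function are invented for illustration, and a short PIN used this way would be far too weak for real use. A production site should rely on an established OTP implementation.

```python
import hashlib
import hmac
import os


def otp_response(pin, challenge):
    """Derive a one-time response from the secret PIN and the site's challenge."""
    digest = hmac.new(pin.encode("utf-8"), challenge, hashlib.sha256).hexdigest()
    return digest[:8]


class Site(object):
    """Toy server side: issues a fresh challenge and accepts each one only once."""

    def __init__(self, pin):
        self._pin = pin
        self._pending = set()

    def new_challenge(self):
        challenge = os.urandom(16)
        self._pending.add(challenge)
        return challenge

    def verify(self, challenge, response):
        if challenge not in self._pending:
            return False  # unknown or already-used challenge
        self._pending.discard(challenge)
        expected = otp_response(self._pin, challenge)
        return hmac.compare_digest(expected, response)


site = Site("4921")
challenge = site.new_challenge()
response = otp_response("4921", challenge)
print(site.verify(challenge, response))  # first use of this challenge
print(site.verify(challenge, response))  # replay of the same pair
```

The second attempt fails because the challenge has already been consumed, which is the property that defeats a sniffer or keystroke logger replaying captured credentials.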

Conclusion: Use “The CLUB™”

The CLUB™ is a lock that you put on the steering wheel of your car as you

park it. It is inexpensive and clearly visible to anyone who might consider

breaking into your car and stealing it. In addition, it will encourage

miscreants to skip your car and move on to a car that does not have a

visible security device. Making your own car a less attractive target is a

highly effective security control technique.

There is a clear analogy to the CLUB™ for defending your website: with

a few relatively simple measures like the ones described above, you can

thwart the scripts that automatically scan the web for vulnerable sites,

and have their owners focus their attention on the many, many websites that

lack basic protection.

Of course basic protective measures do not absolve you entirely: your

website is likely up 24/7 and unlike car thieves, who operate in a public

place, web attackers have all the time in the world to quietly pick away at

the locks on your website until they find a weakness they can exploit. As a

webmaster, you need to plug all the holes, but the villains need to find

only one leak. Therefore, your continued vigilance is required and the tips discussed

above should give you a good start.


![[BIO]](../gx/authors/temme.jpg)

Sander Temme is an Enterprise Solutions Engineer for Thales E-security,

whose clients include Fortune 500 companies, financial services

companies and government agencies. He is a member of the Apache Software

Foundation and is active in the httpd, Infrastructure and Gump projects.

Copyright © 2010, Sander Temme. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 180 of Linux Gazette, November 2010

HelpDex

By Shane Collinge

These images are scaled down to minimize horizontal scrolling.


All HelpDex cartoons are at Shane's web site,

www.shanecollinge.com.


Part computer programmer, part cartoonist, part Mars Bar. At night, he runs

around in his brightly-coloured underwear fighting criminals. During the

day... well, he just runs around in his brightly-coloured underwear. He

eats when he's hungry and sleeps when he's sleepy.


Copyright © 2010, Shane Collinge. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 180 of Linux Gazette, November 2010

Ecol

By Javier Malonda

The Ecol comic strip is written for escomposlinux.org (ECOL), the web site that

supports es.comp.os.linux, the Spanish USENET newsgroup for Linux. The

strips are drawn in Spanish and then translated to English by the author.

These images are scaled down to minimize horizontal scrolling.

All Ecol cartoons are at

tira.escomposlinux.org (Spanish),

comic.escomposlinux.org (English)

and

http://tira.puntbarra.com/ (Catalan).

The Catalan version is translated by the people who run the site; only a few

episodes are currently available.

These cartoons are copyright Javier Malonda. They may be copied,

linked or distributed by any means. However, you may not distribute

modifications. If you link to a cartoon, please notify Javier, who would appreciate

hearing from you.


Copyright © 2010, Javier Malonda. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 180 of Linux Gazette, November 2010

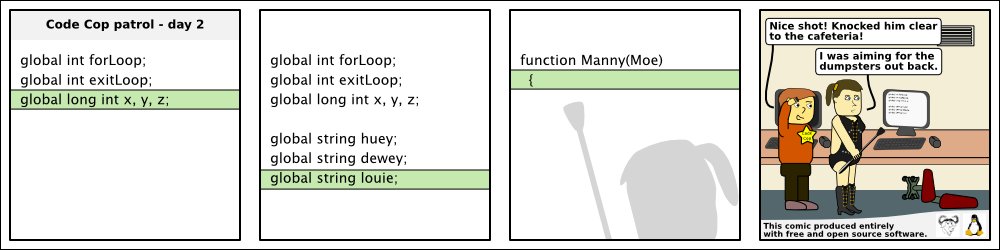

XKCD

By Randall Munroe

More XKCD cartoons can be found

here.


![[BIO]](../gx/2002/note.png)

I'm just this guy, you know? I'm a CNU graduate with a degree in

physics. Before starting xkcd, I worked on robots at NASA's Langley

Research Center in Virginia. As of June 2007 I live in Massachusetts. In

my spare time I climb things, open strange doors, and go to goth clubs

dressed as a frat guy so I can stand around and look terribly

uncomfortable. At frat parties I do the same thing, but the other way

around.

Copyright © 2010, Randall Munroe. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 180 of Linux Gazette, November 2010

Doomed to Obscurity

By Pete Trbovich

These images are scaled down to minimize horizontal scrolling.

All "Doomed to Obscurity" cartoons are at Pete Trbovich's site,

http://penguinpetes.com/Doomed_to_Obscurity/.


![[BIO]](../gx/2002/note.png)

Born September 22, 1969, in Gardena, California, "Penguin" Pete Trbovich

today resides in Iowa with his wife and children. Having worked various

jobs in engineering-related fields, he has since "retired" from

corporate life to start his second career. Currently he works as a

freelance writer, graphics artist, and coder over the Internet. He

describes this work as, "I sit at home and type, and checks mysteriously

arrive in the mail."

He discovered Linux in 1998 - his first distro was Red Hat 5.0 - and has

had very little time for other operating systems since. Starting out

with his freelance business, he toyed with other blogs and websites

until finally getting his own domain penguinpetes.com started in March

of 2006, with a blog whose first post stated his motto: "If it isn't fun

for me to write, it won't be fun to read."

The webcomic Doomed to Obscurity was launched New Year's Day,

2009, as a "New Year's surprise". He has since rigorously stuck to a

posting schedule of "every odd-numbered calendar day", which allows him

to keep a steady pace without tiring. The tagline for the webcomic

states that it "gives the geek culture just what it deserves." But is it

skewering everybody but the geek culture, or lampooning geek culture

itself, or doing both by turns?

Copyright © 2010, Pete Trbovich. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 180 of Linux Gazette, November 2010


![[cartoon]](misc/ecol/tiraecol_en-391.png)

![And what about all the people who won't be able to join the community because they're terrible at making helpful and constructive co-- ... oh.

[cartoon]](misc/xkcd/constructive.png)